U.S. Department of Transportation

Federal Highway Administration

1200 New Jersey Avenue, SE

Washington, DC 20590

202-366-4000

Federal Highway Administration Research and Technology

Coordinating, Developing, and Delivering Highway Transportation Innovations

REPORT

This report is an archived publication and may contain dated technical, contact, and link information

Publication Number: FHWA-HRT-15-072 Date: December 2016


Nearly all transportation data can be associated with a geographic location. Therefore, GIS technology, which allows data collected by different sources to be displayed together on a common map, is seen as a primary data integration tool. Most, if not all, of the examples cited in the previous chapter use GIS as the platform for integrating, analyzing, and visualizing transportation data based on locational proximity.

A key factor in determining how well transportation data can be integrated is the locational referencing method used to describe where each transportation data item is located. The following are the three principal locational referencing method typologies, with each typology having numerous variations:

Another critical component needed for integrating transportation data using GIS technology is the geospatial database representing the physical roadway network itself. Most roadway networks are depicted geospatially as linear features, where each line segment represents the approximate centerline of the roadway (or of each directional travel way for physically separated divided highways). The positional accuracy of the line representing the roadway centerline can vary significantly between databases, although most State transportation agencies (and commercial navigation data developers) currently maintain roadway centerline databases that are within 10 to 40 ft of ground truth. This level of positional accuracy is generally adequate for locating, displaying, and linking most transportation-related attributes, features, and events to the roadway centerline and, through the centerline, to one another.

Roadway centerline databases also vary significantly in which roads are included and which attribute data are included for each road segment. Historically, roadway databases developed by State transportation agencies have included centerline geometry and attributes only for those roads that were maintained by the State or that were required for Federal reporting purposes (e.g., HPMS). Relatively few State transportation agencies maintain a complete, integrated centerline database for all public roads, and none of them routinely update roadway attribute data on roads that are maintained by other agencies. Commercial navigation databases do include all public (and even some private) roads, and they update the key roadway attributes needed for vehicle navigation and routing (e.g., one-way streets, vehicle restrictions, and speeds) through a combination of primary data collection and data sharing with State and local government agencies and even private freight transportation providers (e.g., UPS®, FedEx®).

To provide a common framework for integrating transportation data, the roadway centerline database must include certain specific attributes that facilitate translation between the various referencing methods described earlier in this section. To integrate State road inventory and condition data, the roadway centerline database must include, for each road segment feature, a route identifier and linear measures corresponding to the start and end points of the road segment along the specified route. If roadway attribute data are maintained using more than one LRS, similar attributes must be included for each LRS. To integrate traffic data that are geographically referenced using TMC codes, each road segment feature must also include the associated TMC code representing its location. While multiple road segments may share the same TMC code (e.g., a long stretch of highway between interchanges, or segments representing individual ramps within an interchange), road segments should be split wherever the TMC code changes.
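The attribute requirements above can be sketched as a segment table in which each centerline segment carries a route identifier, begin and end measures, and a TMC code, so that translating a route-milepoint reference into a TMC code (or back) becomes a lookup. This is a minimal sketch, assuming a hypothetical `CenterlineSegment` schema, hypothetical route IDs, and illustrative TMC codes; real agency schemas will differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CenterlineSegment:
    """One roadway centerline segment (hypothetical attribute schema)."""
    route_id: str    # LRS route identifier
    begin_mp: float  # linear measure at the segment start (miles)
    end_mp: float    # linear measure at the segment end (miles)
    tmc_code: str    # TMC location code; segments are split where this changes


def tmc_for_location(segments, route_id: str, milepoint: float) -> Optional[str]:
    """Translate a route-milepoint reference into the TMC code of the segment containing it.

    Intervals are treated as half-open [begin_mp, end_mp), so a point exactly at a
    segment boundary belongs to the downstream segment.
    """
    for seg in segments:
        if seg.route_id == route_id and seg.begin_mp <= milepoint < seg.end_mp:
            return seg.tmc_code
    return None  # location not covered by the segment table


def ranges_for_tmc(segments, tmc_code: str):
    """Reverse translation: all route-milepoint ranges that share one TMC code."""
    return [(s.route_id, s.begin_mp, s.end_mp) for s in segments if s.tmc_code == tmc_code]


# Hypothetical route split into three segments; the last two share a TMC code
# (for example, because some other attribute changes at milepoint 5.1).
network = [
    CenterlineSegment("I-95N", 0.0, 2.4, "110P04101"),
    CenterlineSegment("I-95N", 2.4, 5.1, "110P04102"),
    CenterlineSegment("I-95N", 5.1, 7.8, "110P04102"),
]

print(tmc_for_location(network, "I-95N", 3.0))  # 110P04102
print(ranges_for_tmc(network, "110P04102"))     # [('I-95N', 2.4, 5.1), ('I-95N', 5.1, 7.8)]
```

Because segments are split wherever the TMC code changes, each segment maps to exactly one TMC code, while one TMC code may map to several segments.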

Common geospatial editing and analysis functions can be used to link some data items to the roadway centerline database based on spatial proximity. For example, roadway data referenced using geographic coordinates can be "snapped" to the nearest point on the roadway centerline. That point on the roadway centerline can then be translated into a milepoint measure on a specific route, enabling its display and analysis together with other linear referenced attribute data. The critical assumption in using spatial proximity is that the positional accuracies of both the coordinate-referenced data item and the roadway centerline alignment are close enough to ensure that any spatial search finds the correct match. As the positional accuracy of either spatial feature declines, the likelihood of matching the data item to the wrong roadway centerline increases, requiring additional validity checks and more time to complete them.
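The snapping step can be sketched as projecting a point onto the nearest position along a centerline polyline and converting the distance along the line into a milepoint. This sketch assumes projected coordinates in feet and a hypothetical 50-ft search tolerance; a match farther away than the tolerance is returned as `None` so that it can be routed to the additional validity checks mentioned above. In practice, a GIS library's linear referencing functions (for example, Shapely's `LineString.project`) would normally do this work.

```python
import math


def snap_to_centerline(px, py, vertices, begin_mp=0.0, tolerance_ft=50.0):
    """Snap a point to the nearest location on a centerline polyline.

    vertices: ordered (x, y) vertices in a projected coordinate system measured in feet.
    Returns (milepoint, offset_ft), or None when the nearest location is farther than
    tolerance_ft, which flags the record for a manual validity check.
    """
    best = None   # (offset_ft, distance_along_ft) of the closest candidate so far
    along = 0.0   # length of the polyline before the current segment
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:]):
        dx, dy = x2 - x1, y2 - y1
        seg_len = math.hypot(dx, dy)
        if seg_len == 0.0:
            continue  # skip duplicate vertices
        # Position of the perpendicular foot along this segment, clamped to its ends.
        t = ((px - x1) * dx + (py - y1) * dy) / (seg_len * seg_len)
        t = max(0.0, min(1.0, t))
        qx, qy = x1 + t * dx, y1 + t * dy
        offset = math.hypot(px - qx, py - qy)
        if best is None or offset < best[0]:
            best = (offset, along + t * seg_len)
        along += seg_len
    if best is None or best[0] > tolerance_ft:
        return None
    offset, dist_along = best
    return begin_mp + dist_along / 5280.0, offset


# Hypothetical route: 1 mi east, then 1 mi north, starting at milepoint 10.0.
route = [(0.0, 0.0), (5280.0, 0.0), (5280.0, 5280.0)]
print(snap_to_centerline(2640.0, 30.0, route, begin_mp=10.0))   # (10.5, 30.0)
print(snap_to_centerline(2640.0, 500.0, route, begin_mp=10.0))  # None: 500 ft off the line
```

This searches a single route; with several nearby routes, the same test would be run against each candidate and ambiguous near-ties flagged for review.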

Another consideration in using roadway centerline databases as the common framework for integrating transportation data is whether a centerline depiction provides sufficient feature resolution for its intended application. Typically, a roadway centerline database does not display or explicitly identify individual travel lanes unless they are physically separated by a median or other barrier. This means that information on events or incidents that affect one or more but not all lanes of a roadway (e.g., a crash or roadway construction that blocks the outside travel lane) cannot be explicitly displayed using only a roadway centerline network. Methods are available to graphically display roads with multiple concurrent lanes, but accurately locating lane-level features and events requires additional locational attributes. For example, commercial navigation databases include lane-specific information using a standard lane identification convention (e.g., lanes are numbered consecutively outward, beginning with the inside travel lane, the leftmost lane in the direction of travel, as lane one). These conventions are used in RITIS to display detailed traffic volumes at the individual lane level.
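The additional locational attributes can be sketched as a lane-level event record layered on a route-milepoint location, using the inside-lane-is-lane-one convention described above. The `LaneEvent` record and the `lane_from_outside` helper are hypothetical illustrations, not a schema used by RITIS or any navigation database.

```python
from dataclasses import dataclass


def lane_from_outside(lane_count: int, k: int) -> int:
    """Lane number of the k-th lane counted from the outside (rightmost) edge.

    Convention: lane 1 is the inside (leftmost, in the direction of travel) lane
    and numbers increase outward.
    """
    if not 1 <= k <= lane_count:
        raise ValueError("k must be between 1 and lane_count")
    return lane_count - k + 1


@dataclass(frozen=True)
class LaneEvent:
    """A lane-level event located on a route (hypothetical record layout)."""
    route_id: str
    milepoint: float
    lane_count: int            # through lanes in the direction of travel at this location
    blocked_lanes: frozenset   # lane numbers under the inside-lane-is-1 convention

    def __post_init__(self):
        if any(not 1 <= lane <= self.lane_count for lane in self.blocked_lanes):
            raise ValueError("blocked lane outside 1..lane_count")

    def open_lanes(self):
        return sorted(set(range(1, self.lane_count + 1)) - set(self.blocked_lanes))

    def is_full_closure(self):
        return not self.open_lanes()


# A crash blocking the outside travel lane of a three-lane roadway.
crash = LaneEvent("I-95N", 12.3, 3, frozenset({lane_from_outside(3, 1)}))
print(crash.blocked_lanes, crash.open_lanes(), crash.is_full_closure())  # frozenset({3}) [1, 2] False
```

The centerline still supplies the route and milepoint; only the lane numbers are new, which is why the lane convention must be shared by every data source being integrated.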

Geospatial, a Web portal for sharing geographic information (http://www.data.gov/geospatial/) developed by the Federal Government in 2003, also faced many organizational issues.(56) Geospatial is a public gateway for improving access to geospatial information and data under the Geospatial e-government initiative. Geospatial’s Web portal unifies geospatial data found among 69 Federal and 79 State and local government clearinghouses by using behind-the-scenes search tools to find and display data. Geospatial orchestrates new practices for data-sharing and system interoperability by developing an open system standard that defines a Web-enabled geospatial architecture. Metadata describing the geospatial data on the clearinghouses follow a standard reporting framework to make the information accessible to the Geospatial search tools.

The importance of temporal referencing and resolution for integrating transportation data depends heavily on the specific application and the data being integrated. Most of the roadway inventory data maintained by State transportation agencies are temporally stable and may be updated only once a year or at the conclusion of a specific highway maintenance or construction project.

By contrast, traffic conditions change continuously. The frequency with which traffic condition data are updated depends on the methods by which the data are collected and transmitted, how they are processed, and the purposes for which the data are being used. For example, traffic operations centers require near real-time streaming of actual traffic conditions (e.g., volumes and incident locations) to enable traffic managers to take tactical corrective actions and to see how traffic responds to those actions. Transportation planners, however, typically want traffic data that have been summarized over various time periods (e.g., by 5-min periods over a day, daily over a week or month, or simply AADT). Creating these summaries requires substantial data storage capacity; automated procedures to select, extract, process, and display data of interest; and updating procedures to add new data to the repository on a continuous basis.

Integration of geospatially referenced data having different temporal resolution requires the development of data aggregation and visualization procedures, in close coordination with the end users, for how (near) real-time data will be summarized and displayed. For example, some users may want to view a historical replay of traffic conditions at 5-min intervals for a specific day. Other users may want to produce graphs showing the average hourly weekday traffic volumes and variances along a specific roadway over the past year. Still other users may simply want to update the AADT data items for all roads based on traffic volumes collected over the past year.
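Two of the summaries described above, average hourly weekday volumes with their variances and an annual average of daily totals, can be sketched with the Python standard library. This is a minimal sketch, assuming records arrive as (timestamp, volume) pairs; `simple_aadt` is a deliberate simplification (it assumes complete days) and is not the procedure agencies use to report AADT.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean, pvariance


def hourly_weekday_profile(records):
    """Mean and population variance of hourly volumes on weekdays, by hour of day.

    records: iterable of (timestamp, volume) pairs, e.g., 5-min detector counts.
    """
    hourly_totals = defaultdict(int)  # (date, hour) -> volume summed over the hour
    for ts, volume in records:
        if ts.weekday() < 5:  # Monday=0 ... Friday=4
            hourly_totals[(ts.date(), ts.hour)] += volume
    by_hour = defaultdict(list)  # hour -> one total per weekday observed
    for (_, hour), total in hourly_totals.items():
        by_hour[hour].append(total)
    return {hour: (mean(v), pvariance(v)) for hour, v in sorted(by_hour.items())}


def simple_aadt(records):
    """Mean of daily totals over the days present.

    Simplification: assumes every day in the data is complete. Procedures used for
    reporting AADT (e.g., averaging by month and day of week) are more involved.
    """
    daily_totals = defaultdict(int)
    for ts, volume in records:
        daily_totals[ts.date()] += volume
    return mean(daily_totals.values())


# Hypothetical 5-min counts for the 8 a.m. hour on two weekdays and one Saturday.
records = []
for start, count in [(datetime(2024, 1, 1, 8), 50),    # Monday
                     (datetime(2024, 1, 2, 8), 60),    # Tuesday
                     (datetime(2024, 1, 6, 8), 100)]:  # Saturday
    records += [(start + timedelta(minutes=5 * i), count) for i in range(12)]

print(hourly_weekday_profile(records))
print(simple_aadt(records))
```

Here only the two weekday hours (600 and 720 vehicles) enter the weekday profile, giving a mean of 660, while all three days (600, 720, and 1,200 vehicles) enter the daily average of 840. The same raw records thus feed both summaries without being altered.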

To support these diverse user requirements, data storage formats need to be established that enable efficient selection, extraction, and summarization of individual traffic data records. At a minimum, the data formats should standardize both geospatial and temporal references. For geospatial referencing, either geographic coordinates or TMC codes should be sufficient, provided that TMC codes are attached to the geospatial roadway centerline database. For temporal referencing, standardized representation of dates (e.g., YYYYMMDD) and time (e.g., HHMMSS, 24-h clock) should be attached to each data record. If raw incoming data are not transmitted in the standardized format, they need to be converted as part of the extract, transform, and load procedure.
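The conversion step in the extract, transform, and load procedure can be sketched as trying each known source timestamp format and re-emitting the date as YYYYMMDD and the time as HHMMSS on a 24-h clock. The list of source formats and the record fields (`tmc`, `timestamp`, `volume`) are hypothetical, and time-zone handling is omitted on the assumption that every feed reports local time.

```python
from datetime import datetime

# Hypothetical formats observed in incoming feeds; extend per data source.
SOURCE_FORMATS = (
    "%Y-%m-%dT%H:%M:%S",     # ISO 8601 without a UTC offset
    "%m/%d/%Y %I:%M:%S %p",  # U.S. style, 12-h clock
    "%Y%m%d%H%M%S",          # already compact
)


def standardize_timestamp(raw: str) -> tuple:
    """Return (YYYYMMDD, HHMMSS) on a 24-h clock for a raw timestamp string."""
    for fmt in SOURCE_FORMATS:
        try:
            ts = datetime.strptime(raw.strip(), fmt)
        except ValueError:
            continue  # not this format; try the next one
        return ts.strftime("%Y%m%d"), ts.strftime("%H%M%S")
    raise ValueError(f"unrecognized timestamp format: {raw!r}")


def transform_record(raw_record: dict) -> dict:
    """Transform step: attach the standardized date and time to a traffic record."""
    date, time = standardize_timestamp(raw_record["timestamp"])
    return {"tmc": raw_record["tmc"], "date": date, "time": time,
            "volume": int(raw_record["volume"])}


print(standardize_timestamp("01/15/2016 02:05:09 PM"))  # ('20160115', '140509')
print(transform_record({"tmc": "110P04102", "timestamp": "2016-01-15T00:00:00", "volume": "42"}))
```

Rejecting unrecognized formats with an error, rather than guessing, keeps malformed timestamps out of the repository where they would be hard to detect later.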

Quality control procedures are necessary if archived data are to be usable for a variety of applications. The quality of archived data from traffic operations systems has been influenced by the following two prevailing issues:

The result has been that some managers and users of data archived from traffic operations have wrestled with data quality problems. The following quality assurance strategies are the most important when using archived operations data:

Traffic Data Quality Measurement is an excellent source for methods and tools that enable traffic data collectors and users to determine the quality of traffic data they are providing, sharing, and using.(57) The report presents a framework of methodologies for calculating the data quality metrics for different applications. The report also presents guidelines and standards for calculating data quality measures to address the following key traffic data quality issues: defining and measuring traffic data quality, quantitative and qualitative metrics of traffic data quality, acceptable levels of quality, and methodology for assessing traffic data quality.

The framework is developed based on the six recommended fundamental measures of traffic data quality defined as follows:(58)

Table ES-1, taken from the FHWA report Traffic Data Quality Measurement and reproduced as table 5, shows the range of data consumers, types of data, and possible applications that are considered in developing the framework. Table 6 indicates example data quality targets.

| Data Consumers | Type of Data | Applications or Users |
|---|---|---|
| Traffic operators (of all stripes) | | |
| Archived data administrators | Original source data | Database administration |
| Archived data users (planners and others) | | |
| Traffic data collectors | | |
| Information service providers | Original source data (real time) | Dissemination of traveler information |
| Travelers | Traveler information | Pre-trip planning |

| Applications | Purpose | Data | Accuracy | Completeness | Validity | Timeliness | Typical Coverage |
|---|---|---|---|---|---|---|---|
| Transportation planning applications | Standard demand forecasting for long-range planning | Daily traffic volumes | | At a given location 25 percent—12 consecutive hours of 48-h count | | Within 3 years of model validation year | |
| Transportation planning applications | Highway performance monitoring system | AADT | | | | Data 1 year old or less | |
| Transportation operations | Traveler information | Travel times for entire trips or portions of trips over multiple links | 10- to 15-percent root mean squared error | 95- to 100-percent valid data | Less than 10-percent failure rate | Data required close to real time | 100-percent area coverage |
| Highway safety | Exposure for safety analysis | AADT and VMT by segment | | | | Data 1 year old or less | |
| Pavement management | Historical and forecasted loadings | Link vehicle class | | | | Data 3 years old or less | |
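Targets like those in the traveler information row can be checked by computing the corresponding measures from the data. The sketch below uses one common formulation of each measure (root mean squared error as a percent of the reference value, completeness as received over expected records, and validity as the share passing a rule); the Traffic Data Quality Measurement report defines its own formulas, which may differ. All inputs, including the 0 to 3,000 vehicle range used as a validity rule, are hypothetical.

```python
import math


def percent_rmse(estimates, references):
    """Root mean squared error expressed as a percent of the reference values.

    Pairs whose reference value is zero are skipped to avoid division by zero.
    """
    pairs = [(e, r) for e, r in zip(estimates, references) if r != 0]
    if not pairs:
        raise ValueError("no usable reference values")
    return 100.0 * math.sqrt(sum(((e - r) / r) ** 2 for e, r in pairs) / len(pairs))


def completeness(n_received, n_expected):
    """Percent of expected records (e.g., 5-min intervals in a day) actually received."""
    return 100.0 * n_received / n_expected


def validity(values, rule):
    """Percent of received values that pass a validity rule."""
    values = list(values)
    return 100.0 * sum(1 for v in values if rule(v)) / len(values)


# Hypothetical inputs: probe travel times (min) against a reference, a day of
# 5-min records, and hourly lane volumes checked against an assumed 0-3,000 range.
acc = percent_rmse([11, 9, 10, 12], [10, 10, 10, 10])
comp = completeness(276, 288)
val = validity([1200, -5, 1800, 5000], lambda v: 0 <= v <= 3000)
print(f"accuracy {acc:.1f}% RMSE, completeness {comp:.1f}%, validity {val:.0f}% "
      f"(failure rate {100 - val:.0f}%)")
```

With these inputs the travel time accuracy (about 12 percent) would fall within the 10- to 15-percent range, while the validity result would not meet a failure rate below 10 percent.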

Metadata are typically used to determine the availability of certain data, determine the fitness of data for an intended use, determine the means of accessing data, and enhance data analysis and interpretation by improving understanding of the data collection and processing procedures. Metadata provide the information necessary for data to be understood and interpreted by a wide range of users. Thus, metadata are particularly important when the data users are physically or administratively separated from the data producers. Metadata also reduce the workload associated with answering the same questions from different users about the origin, transformation, and character of the data. The use of metadata standards and formats helps users understand the characteristics of the data and how the data can be used.(58)

ASTM has published metadata standards for ITS data in ASTM E2468-05, “Standard Practice for Metadata to Support Archived Data Management Systems.” (59) As stated on the ASTM Web site, “This standard is applicable to various types of operational data collected by ITSs and stored in an ADMS. Similarly, the standard can also be used with other types of historical traffic and transportation data collected and stored in an archived data management system.” (60)

This standard adopts the Federal Geographic Data Committee's (FGDC) existing Content Standard for Digital Geospatial Metadata (FGDC-STD-001-1998) (with minimal changes) as the recommended metadata framework for ADMSs.(60) The FGDC metadata standard was chosen as the framework because of its relevance and established reputation among the spatial data community. A benefit of using the FGDC standard is the widespread availability of informational resources and software tools to create, validate, and manage metadata (see https://www.fgdc.gov/metadata/index_html).(61)

Because the ASTM E2468-05 metadata standard is based on the FGDC standards for geographic metadata, the components of the nongeographic metadata structure, as provided in the following list, are the same except for those related to spatial data:

Additional metadata standards include the following:

These standards and their requirements are discussed in the following paragraphs.

Under Executive Order 12906, all Federal agencies must use the Content Standard for Digital Geospatial Metadata (CSDGM) Version 2 (FGDC-STD-001-1998) to document geospatial data created on or after January 1995.(60) Many State and local governments have adopted this standard for their geospatial metadata as well.

The international community, through the ISO, has developed and approved an international metadata standard, ISO 19115. As a member of ISO, the United States is required to revise the CSDGM in accordance with ISO 19115. Each nation can craft its own profile of ISO 19115 with the requirement that it include the 13 core elements. The FGDC is currently leading development of a U.S. profile of the international metadata standard ISO 19115.(62) The ISO 19115 core metadata elements are listed in table 7.

| Element Category | Element Name |
|---|---|
| Mandatory | Dataset title |
| | Dataset reference date |
| | Dataset language |
| | Dataset topic category |
| | Abstract |
| | Metadata point of contact |
| Conditional | Dataset responsible party |
| | Geographic location by coordinates |
| | Dataset character set |
| | Spatial resolution |
| | Distribution format |
| | Spatial representation type |
| | Lineage statement |
| | Online resource |
| | Metadata file identifier |
| | Metadata standard name |
| | Metadata standard version |
| | Metadata language |
| | Metadata character set |

The U.S. FEA is an initiative of the U.S. Office of Management and Budget that aims to comply with the Clinger-Cohen Act and provide a common methodology for IT acquisition in the United States Federal Government.(63) It is designed to ease sharing of information and resources across Federal agencies, reduce costs, and improve citizen services.(64)

The FEA comprises the following five models:

The Minnesota Department of Transportation (MnDOT) standards for metadata elements were established by the State's Data Governance Work Team prior to implementation of data governance in 2011. The metadata standards were formally adopted by the Business Information Council in November 2009. The mandatory metadata elements and definitions (see table 8) are based on the Dublin Core Metadata Element Set and the Minnesota Recordkeeping Metadata Standard. These elements should be applied at the table level at a minimum. Ideally, they should also be applied at the column level, based on customer or business need.

| Element | Definition | Table Level | Column Level |
|---|---|---|---|
| Title | The name given to the entity. | X | X |
| Point of Contact | The organizational unit that can be contacted with questions about the entity or about accessing it. | X | — |
| Subject | The subject or topic of the entity, which is selected from a standard subject list. | X | — |
| Description | A written account of the content or purpose of the entity. Accuracy or quality descriptions may also be included. | X | X |
| Update Frequency | A description of how often the record is updated or refreshed. | X | — |
| Date Updated | The point or period of time at which the entity was updated. | X | — |
| Format | The file format or physical form of the entity. | — | X |
| Source | The primary source of record from which the described resource originated. | X | — |
| Lineage | The history of the entity: how it was created and revised. | X | — |
| Dependencies | Other entities, systems, and tables that are dependent on the entity. | X | — |

— Indicates the element is not applicable at this level.
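Because the MnDOT table marks which elements apply at the table and column levels, a completeness check on a metadata record can be driven directly from it. This is a minimal sketch, assuming metadata records are held as simple dictionaries keyed by element name; the sample record and its values are hypothetical.

```python
# (table level, column level) applicability, transcribed from the MnDOT table above.
MNDOT_ELEMENTS = {
    "Title": (True, True),
    "Point of Contact": (True, False),
    "Subject": (True, False),
    "Description": (True, True),
    "Update Frequency": (True, False),
    "Date Updated": (True, False),
    "Format": (False, True),
    "Source": (True, False),
    "Lineage": (True, False),
    "Dependencies": (True, False),
}


def missing_elements(record, level="table"):
    """Return the elements applicable at `level` that are absent or blank in `record`."""
    col = {"table": 0, "column": 1}[level]
    return [name for name, applies in MNDOT_ELEMENTS.items()
            if applies[col] and not str(record.get(name, "")).strip()]


# Hypothetical table-level record for an AADT table.
aadt_table = {
    "Title": "Annual Average Daily Traffic by Segment",
    "Point of Contact": "Traffic data office (hypothetical)",
    "Subject": "Traffic",
    "Description": "AADT estimates for highway segments.",
    "Update Frequency": "Annually",
}
print(missing_elements(aadt_table))  # ['Date Updated', 'Source', 'Lineage', 'Dependencies']
```

Running the same check with `level="column"` applies the column-level markings, so a column record lacking a format would be reported.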

The Caltrans Library uses the MARC 21 metadata standard and CONTENTdm®, which uses the Dublin Core American National Standards Institute (ANSI) standard.(65) The GIS library has been through numerous iterations, but in general, a considerable amount of its information still lacks metadata, which is becoming an issue. Caltrans Earth (an application that uses a Google Earth™ plugin) will not accept data without metadata; it uses ISO 19115 Geographic Information—Metadata.(62) The Data Retrieval System (DRS) requires some metadata, and its metadata requirements are system dependent. Each metadata record has core elements plus some elements that are specific to its category. The metadata for the DRS are established by the data administrator and the DRS Steering Committee, usually for search and retrieval purposes.(66)

This document provides requirements for metadata that must be followed by registered users who submit data for the Research Data Exchange (RDE). In terms of standards, metadata must follow ASTM E2468-05, Standard Practice for Metadata to Support Archived Data Management Systems.(59) Metadata content includes seven main sections: identification information, data quality information, spatial data organization information, spatial reference information, entity and attribute information, distribution, and metadata reference information. The document also defines the following roles and responsibilities in metadata management:

In summary, both the ASTM Standard Practice for Metadata to Support Archived Data Management Systems (E2468-05) and a modified Dublin Core metadata schema have widespread industry acceptance. Either one is recommended for the VDA Framework.(59)
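Because ASTM E2468-05 builds on the FGDC CSDGM, the seven main sections listed for RDE metadata correspond to the CSDGM sections, which have short tag names in the CSDGM XML encoding. The sketch below creates an empty skeleton with those sections and reports which sections a submitted document lacks. It assumes, without confirmation from the source, that submissions use CSDGM-style XML; because CSDGM treats several sections as mandatory only if applicable, missing sections are reported for review rather than rejected.

```python
import xml.etree.ElementTree as ET

# The seven main CSDGM sections, keyed by their short XML tag names.
SECTIONS = {
    "idinfo": "Identification Information",
    "dataqual": "Data Quality Information",
    "spdoinfo": "Spatial Data Organization Information",
    "spref": "Spatial Reference Information",
    "eainfo": "Entity and Attribute Information",
    "distinfo": "Distribution Information",
    "metainfo": "Metadata Reference Information",
}


def empty_skeleton() -> str:
    """Return an XML skeleton with one empty element per section, in standard order."""
    root = ET.Element("metadata")
    for tag in SECTIONS:
        ET.SubElement(root, tag)
    return ET.tostring(root, encoding="unicode")


def missing_sections(xml_text: str) -> list:
    """Names of sections absent from a metadata document (for review, not auto-rejection)."""
    root = ET.fromstring(xml_text)
    present = {child.tag for child in root}
    return [name for tag, name in SECTIONS.items() if tag not in present]


print(empty_skeleton())
print(missing_sections("<metadata><idinfo/><dataqual/><metainfo/></metadata>"))
```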

Data migration approaches abandon the effort to keep old technology working or to create substitutes that emulate or imitate it. Instead, these approaches rely on changing the digital encoding of the objects to preserve them while making it possible to access those objects using state-of-the-art technology after the original hardware and software become obsolete.

The following subsections describe a variety of migration strategies described in NCHRP Report 754.(66)

The most direct path for format migration, and one used very commonly, is simple version migration within the same family of products or data types. Successive versions of given formats, such as Corel WordPerfect®’s WPD or Microsoft® Excel’s XLS, define linear migration paths for files stored in those formats. Software vendors usually supply conversion routines that enable newer versions of their product to read older versions.

An alternative to the uncertainties of version migration is format standardization, whereby a variety of data types are transformed to a single, standard type. For example, a textual document, such as a WordPerfect® document, could be reduced to plain ASCII. Obviously, there would be some loss if font, type size, and formatting were significant. However, this conversion is eminently practicable, and it would be appropriate in cases where the essential characteristics to be preserved are the textual content and the grammatical structure. Where typeface and font attributes are important, richer formats, such as PDF or Rich Text Format, could be adopted as standards.
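Reducing text to plain ASCII loses more than font and formatting: characters without an ASCII form can also disappear. The sketch below shows one way such a reduction might be performed with Python's `unicodedata` module, assuming the textual content is already available as a string; it is an illustration of the trade-off, not a recommended preservation procedure.

```python
import unicodedata


def to_plain_ascii(text: str) -> str:
    """Reduce text to plain ASCII.

    Compatibility decomposition (NFKD) splits accented letters into a base letter plus
    combining marks; encoding with errors="ignore" then silently drops every character
    that has no ASCII form, which is where information is lost.
    """
    decomposed = unicodedata.normalize("NFKD", text)
    return decomposed.encode("ascii", errors="ignore").decode("ascii")


print(to_plain_ascii("Café résumé"))  # Cafe resume
print(repr(to_plain_ascii("東京")))   # '' (nothing survives)
```

For English-language reports the loss is usually limited to accents and typographic symbols, which is why the conversion is practicable when textual content is what matters.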

Another approach to migrating data formats into the future is Typed Object Model (TOM) conversion. The TOM approach begins with the recognition that all digital data items are objects, that is, they have specified attributes, specified methods or operations, and specific semantics. All digital objects belong to one or another type of digital object, where “type” is defined by given values of attributes, methods, or semantics for that class of objects. A Microsoft® Word 6 document, for example, is a type of digital object defined by its logical encoding. An electronic mail message is a type of digital object defined, at the conceptual and logical levels, by essential data elements (e.g., To, From, Subject, or Date).
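The idea that a type is defined by its essential elements can be illustrated with the electronic mail example: a converter that knows the essential elements of the type can move a message into a new encoding while preserving them. The sketch below is not the TOM conversion service itself; it uses Python's `email` package to read a simple message and re-encode its To, From, Subject, and Date elements (plus the body) as JSON, a target format chosen arbitrarily. The message content is hypothetical.

```python
import json
from email import message_from_string

# Essential data elements that define the "electronic mail message" type.
ESSENTIAL_ELEMENTS = ("To", "From", "Subject", "Date")


def email_to_json(raw_message: str) -> str:
    """Re-encode a simple (non-multipart) e-mail message as JSON, keeping its essential elements."""
    msg = message_from_string(raw_message)
    if msg.is_multipart():
        raise ValueError("multipart messages are outside this sketch")
    record = {name: msg.get(name) for name in ESSENTIAL_ELEMENTS}
    record["Body"] = msg.get_payload()
    return json.dumps(record, sort_keys=True)


raw = (
    "From: analyst@example.gov\n"
    "To: archivist@example.gov\n"
    "Subject: Monthly count file\n"
    "Date: Tue, 15 Mar 2016 10:00:00 -0500\n"
    "\n"
    "The March counts are ready.\n"
)
print(email_to_json(raw))
```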

Another approach enables migration through an object interchange format defined at the conceptual level. This type of approach is being widely adopted for e-commerce and e‑government where participants in a process or activity have their own internal systems that cannot readily interact with systems in other organizations. Rather than trying to make the systems directly interoperable, developers are focusing on the information objects that need to be exchanged to do business or otherwise interact. These objects are formally specified according to essential characteristics at the conceptual level, and those specifications are articulated in logical models. The logical models or schema define interchange formats. To collaborate or interact, the systems on each side of a transaction need to be able to export information in the interchange format and to import objects in this format from other systems. The XML family of standards has emerged as a major vehicle for exchange of digital information between and among different platforms.
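The export/import pattern described above can be sketched with two systems that keep the same traffic count information in different internal structures and exchange it only through an agreed XML interchange format. The `<counts>`/`<count>` schema, its attribute names, and both "systems" are hypothetical; the point is that neither side needs to know the other's internal representation.

```python
import xml.etree.ElementTree as ET

# Hypothetical interchange schema agreed at the conceptual level:
# <counts><count station="..." date="YYYYMMDD" volume="..."/>...</counts>


def export_from_system_a(rows):
    """System A keeps (station, date, volume) tuples and exports them in the interchange format."""
    root = ET.Element("counts")
    for station, date, volume in rows:
        ET.SubElement(root, "count", station=station, date=date, volume=str(volume))
    return ET.tostring(root, encoding="unicode")


def import_into_system_b(xml_text):
    """System B uses its own field names; it only needs to understand the interchange format."""
    root = ET.fromstring(xml_text)
    return [{"site_id": c.get("station"), "count_date": c.get("date"),
             "vehicles": int(c.get("volume"))}
            for c in root.findall("count")]


exchanged = export_from_system_a([("S001", "20160115", 1234), ("S002", "20160115", 987)])
print(exchanged)
print(import_into_system_b(exchanged))
```

If either system is later replaced, only its export or import routine must change; the interchange format, and any data archived in it, stays the same.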

This review identified the following data formats:

The Archived Data User Service (ADUS) standards of the National ITS Architecture for file and data formats should also be considered. ADUS is concerned with storing data generated from ITS, integrating it with other data, repackaging it, and making it accessible to a wide variety of stakeholders and applications.

NCHRP Report 754 noted that one of the challenges in developing transportation information best practices is assessing the risk that the file formats chosen to store data and information may become obsolete.(66) A file format is a particular way that information is encoded for storage in a computer file, and different kinds of information use different kinds of formats. To preserve content in digital form, data custodians must be able to distinguish between format refinements and variants, because these distinctions can be significant to sustainability, functionality, or quality. However, this may be difficult for several reasons: new formats are very complex, and there may be no obvious way to determine the format of a file; different formats are employed or favored at different stages of a content item's lifecycle; and formats are often proprietary and may be usable only with the creator's software package.
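The difficulty of determining a file's format can be seen by checking the leading "magic number" bytes that many formats begin with. The signatures below are well-known values for a few common formats, but the sketch also shows the limits of the approach: a ZIP signature does not say whether the file is a spreadsheet, a word processing document, or a KMZ, and plain text formats such as CSV have no signature at all. The function names are illustrative only.

```python
# Leading signatures for a few common formats.
SIGNATURES = (
    (b"%PDF-", "PDF"),
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"PK\x03\x04", "ZIP container (e.g., XLSX, DOCX, KMZ)"),
    (b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1", "OLE2 compound file (e.g., legacy XLS, DOC)"),
    (b"{\\rtf", "Rich Text Format"),
)


def sniff_format(header: bytes) -> str:
    """Best-effort format identification from the first bytes of a file."""
    for magic, name in SIGNATURES:
        if header.startswith(magic):
            return name
    # Printable ASCII (plus tab, LF, CR) suggests text, but not which text format.
    if all(32 <= b < 127 or b in (9, 10, 13) for b in header):
        return "text (format not self-identifying)"
    return "unknown (no recognized signature)"


def sniff_file(path: str) -> str:
    with open(path, "rb") as f:
        return sniff_format(f.read(16))


print(sniff_format(b"%PDF-1.7\n"))       # PDF
print(sniff_format(b"route,mp,aadt\n"))  # text (format not self-identifying)
```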

As stated in NCHRP Report 754, two types of factors come into play in choosing file formats for long-term needs: sustainability factors and quality and functionality factors.(66) Quality and functionality factors pertain to the ability of a format to represent the significant characteristics required or expected by current and future users of a given content item. These factors vary for particular genres or forms of expression. For example, significant characteristics of sound are different from those for still pictures, whether digital or not, and not all digital formats for images are appropriate for all genres of still pictures. The following seven sustainability factors influence the feasibility and cost of preserving content:(57)

A recent white paper prepared for FHWA noted that data resolution needs—both temporal and spatial—for VDA vary according to the application.(68) Table 9 provides an example of how the resolution needs for volumes, speeds, and travel times vary according to the intended application.

| Data Type | Resolution | ||||||

|---|---|---|---|---|---|---|---|

| Temporal Application | Spatial Application | ||||||

| Connected Vehicle | Simulation | RTP | HPMS | Connected Vehicle | Simulation | RTP | |

| Volumes | Very High—subsecond | High—5min | Medium—1 h | Low—24 h | Link | Link | Link and areawide |

| Speeds | Medium—1h | Link | Link | Link and areawide | |||

| Travel Times | — | Link and corridor | Link and corridor | Link and areawide | |||

| — Indicates the data type is not applicable to the application. | |||||||

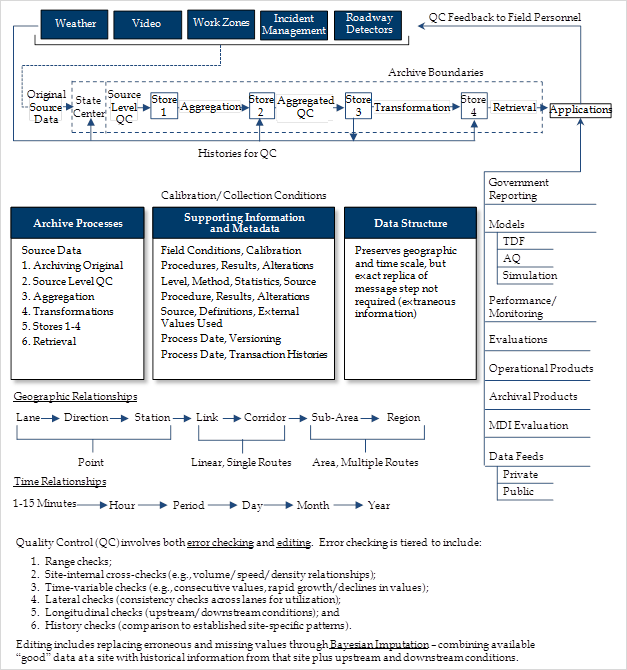

The VDA white paper also describes the differences between active and passive archiving, the main difference being that active archiving involves extensive data management methods and protocols.(68) Figure 32 (taken from the white paper) shows an example of active archiving. Passive archiving is less formal and usually just means that the data are stored with minimal processing. Cost and the assignment of responsibility (who will be the archivist?) are the major determining factors. For VDA, the main question is whether technology has progressed to the point that active management is no longer necessary on a full-time basis, that is, whether it can be done virtually as the need arises.

©Cambridge Systematics, Inc.

Figure 32. Diagram. Actively managed VDA Framework, which involves several processes to make data fully usable.(68)

Most of the key issues to be addressed are similar to those faced by the ADUS of the National ITS Architecture. ADUS is concerned with storing data generated from ITS, integrating it with other data, repackaging it, and making it accessible to a wide variety of stakeholders and applications. FHWA-sponsored work led to the development of the following three ASTM standards that are directly relevant for VDA:

Another excellent source is General Requirements for an Archived Data Management System, a report prepared by Cambridge Systematics, Inc., for FDOT District 4 in preparation for the development of a regional ADMS to store, integrate, and access the following types of data:(71)

The requirements cover types of queries (real-time queries and batch queries); user roles and rights (standard users, advanced users, and system administrators); accessing the system; creating, submitting, and retrieving query results; query and result file management; reports; data storage; data processing; data management and archiving; operational requirements (database, application, and networking environment); and nonfunctional requirements (look and feel, usability, documentation and online help, performance, portability, and security).

It is nearly certain that various changes will be made to the calculation procedures related to data integration after reports have been publicly released, and the VDA white paper discusses the need for version control during the development of a performance monitoring program.(68) For example, consider the impacts that might occur when an improved travel time estimation algorithm is developed after several years of performance reports. The improved algorithm is more accurate, but it also estimates travel times that are consistently shorter. However, several years of performance reports that use the old algorithm, which produces longer travel times, have already been published. Instead of being overwritten, the old statistics should be retained in the data management system as a previous version that has expired. The new statistics based on the improved algorithm would then be stored as the current version.
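The retain-and-expire approach described above can be sketched as a statistic that keeps every published version, marks the superseded one as expired, and can still return whichever version was current on a given date, so that old reports remain reproducible. The class names, statistic name, values, and algorithm labels are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class StatVersion:
    value: float
    method: str                        # label of the algorithm that produced the value
    valid_from: date
    expired_on: Optional[date] = None  # None marks the current version


@dataclass
class VersionedStatistic:
    """Keeps every published version of a statistic instead of overwriting it."""
    name: str
    versions: List[StatVersion] = field(default_factory=list)

    def publish(self, value, method, effective):
        for v in self.versions:
            if v.expired_on is None:
                v.expired_on = effective  # expire the previous version; do not delete it
        self.versions.append(StatVersion(value, method, effective))

    def current(self):
        return next(v for v in self.versions if v.expired_on is None)

    def version_on(self, day):
        """Version that was current on `day`, e.g., to reproduce an old report."""
        for v in self.versions:
            if v.valid_from <= day and (v.expired_on is None or day < v.expired_on):
                return v
        return None


# Hypothetical corridor statistic recomputed with an improved algorithm in 2016.
tt = VersionedStatistic("Corridor A peak-period travel time (min)")
tt.publish(24.5, "travel-time-v1", date(2013, 1, 1))
tt.publish(22.1, "travel-time-v2", date(2016, 1, 1))
print(tt.current().method, tt.version_on(date(2014, 6, 30)).value)  # travel-time-v2 24.5
```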

The VDA white paper also provides guidance on data transformation, which is the act of changing data and is quite common when archiving real-time traffic data.(68) As collected, real-time traffic data are highly detailed and are stored in a database designed for quick access to current conditions. However, a data archive typically does not retain the original level of detail, and the archive database must be designed for quick access to a wide range of historical conditions. Transformation can be as simple as aggregating data over time and space or as complicated as creating new metrics (e.g., travel-time-based performance metrics from detector measurements). In all cases, alternative data processing procedures and assumptions can be used to produce the same metric, and differences among them can lead to inconsistent final values. For example, the choice of free-flow speed or the length of a peak period will affect the transformed results. Again, the issue is whether agencies should be free to use whatever methods they choose (with adequate metadata documentation) or whether a standard set of procedures needs to be fostered.
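The effect of the free-flow speed and peak-period choices can be shown with a simple delay calculation applied to the same observations under different assumptions. This is a minimal sketch with hypothetical hourly travel times on a hypothetical 5-mi segment; delay is taken as observed travel time minus free-flow travel time, with negative values counted as zero.

```python
def peak_delay_minutes(observations, length_mi, free_flow_mph, peak_start, peak_end):
    """Sum of interval delays (observed minus free-flow travel time) within a peak period.

    observations: (hour_of_day, travel_time_min) pairs for one segment; the peak
    period is the half-open interval [peak_start, peak_end). Negative delays count as 0.
    """
    free_flow_min = 60.0 * length_mi / free_flow_mph
    return sum(max(0.0, tt - free_flow_min)
               for hour, tt in observations if peak_start <= hour < peak_end)


# Hypothetical hourly travel times (min) on a 5-mi segment.
obs = [(6.0, 6.0), (7.0, 9.0), (8.0, 8.0), (9.0, 5.5)]

for ffs, (start, end) in [(60, (7, 9)), (50, (7, 9)), (60, (6, 10))]:
    delay = peak_delay_minutes(obs, 5.0, ffs, start, end)
    print(f"free-flow {ffs} mph, peak {start}-{end}: {delay:.1f} min")
```

The same data yield 7.0, 5.0, and 8.5 min of delay under the three sets of assumptions, which is why the chosen free-flow speed and peak period need to be recorded in the metadata even if agencies are free to choose them.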

All the examples, standards, and best practices described in this chapter will be considered in the design and development of the VDA Framework.