U.S. Department of Transportation

Federal Highway Administration

REPORT

This report is an archived publication and may contain dated technical, contact, and link information.

Publication Number: FHWA-HRT-12-054; Date: December 2012

This section discusses the following:

Automatic measurement methodologies are based on the use of traffic detectors at selected locations on the roadway or on probe technologies (i.e., tracking vehicles on the roadway).

6.1.1 Point Detection and Generation of Traffic Data

Many agencies currently have the capability to provide evaluations. Table 22 describes the data collection characteristics for several agencies. These data are generally first aggregated to 5-min periods before they are processed further for evaluation studies.

These systems are generally based on the measurement of traffic parameters at specific locations on the roadway and have historically relied on inductive loop detectors spaced at average distances of one-third to two-thirds of a mile. They provide volume and occupancy and, in some cases, speed data to the TMC at intervals ranging from 20 s to 1 min. If speed is not provided by the detectors themselves (a loop trap is required in order to sense speed), then speed is estimated at the TMC. A loop trap consists of two closely spaced loop detectors. The travel time between presence indications is a measure of speed. Recently, other types of point detectors such as radar detectors have been used with increasing frequency.
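The aggregation of short-interval detector reports into 5-min periods can be sketched as follows. This is a minimal illustration, not the method of any particular agency: the record layout `(timestamp_s, volume, occupancy_pct)` and the function name `aggregate_to_5min` are assumptions made for the example, with volume summed and occupancy averaged over each period.

```python
from collections import defaultdict


def aggregate_to_5min(records, period_s=300):
    """Aggregate (timestamp_s, volume, occupancy_pct) records into fixed periods.

    Volume is summed and occupancy is averaged over each period.
    The record layout is hypothetical and chosen only for illustration.
    """
    bins = defaultdict(list)
    for t, vol, occ in records:
        # Key each record by the start time of the period it falls in.
        bins[t // period_s * period_s].append((vol, occ))

    out = {}
    for start in sorted(bins):
        samples = bins[start]
        total_volume = sum(v for v, _ in samples)
        mean_occupancy = sum(o for _, o in samples) / len(samples)
        out[start] = (total_volume, mean_occupancy)
    return out


# Fifteen 20-s reports fill the first 5-min period; one report starts the next.
records = [(20 * i, 10, 8.0) for i in range(15)] + [(300, 12, 9.0)]
result = aggregate_to_5min(records)
```

Here `result` is keyed by the period start time in seconds, so the first fifteen reports are combined into the period starting at 0 and the last report falls in the period starting at 300.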

When loop traps are not available, speed may be estimated at the TMC from loop detector occupancy and volume measurements. A relationship employed by WSDOT is provided in figure 55.(17)

$$v = \frac{q}{g \cdot o}$$

Figure 55. Equation. Estimated speed.

Where:

v = Estimated speed.

g = Factor that incorporates vehicle length and loop detector length.

o = Percentage occupancy.

q = Volume in vehicles per hour.
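The following sketch works through a speed estimate of this kind. It assumes the commonly used single-loop relationship in which speed equals volume divided by the product of g and occupancy, and it assumes that g is derived from a fixed effective vehicle length (vehicle length plus loop length) so that speed comes out in miles per hour. The 22-ft effective length is the value cited for Minnesota in table 22; the volume and occupancy values and the function names are illustrative only.

```python
FEET_PER_MILE = 5280.0


def g_factor(effective_length_ft):
    """g for occupancy in percent, volume in veh/h, and speed in mi/h.

    Assumes a fixed effective vehicle length (vehicle plus loop), in feet.
    """
    return FEET_PER_MILE / (100.0 * effective_length_ft)


def estimate_speed_mph(volume_vph, occupancy_pct, g):
    """Estimate speed as v = q / (g * o); returns None when occupancy is zero."""
    if occupancy_pct <= 0:
        return None
    return volume_vph / (g * occupancy_pct)


g = g_factor(22.0)                           # 22 ft effective length gives g = 2.4
speed = estimate_speed_mph(1800.0, 10.0, g)  # 1,800 veh/h at 10 percent occupancy
```

With these inputs, g is 2.4 and the estimated speed is 75 mi/h. A system that recomputes g continuously would replace the fixed `g_factor` call with its own per-lane estimate.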

The Caltrans PeMS system accomplishes this function by using a continuously computed g factor.(6) Table 22 summarizes how representative performance monitoring systems generate basic data.

Table 22. Basic data generation systems.

| System | Reference | Principal Data Source | Volume | Occupancy | Speed | Basic Spatial Definition | Short Period Time Data Organization | Notes |

|---|---|---|---|---|---|---|---|---|

| PeMS | 5 | Single loop detectors in each lane reported every 20 s; spacing approximately 0.5 mi | From loop detectors | From loop detectors | Computed from volume and occupancy by developing g factor in real time for each lane | Segment-region between detector stations | 5 min | Statewide system that collects data from individual TMCs |

| Florida STEWARD System | 40 | Example installation uses remote traffic microwave sensor (RTMS) radar detectors at approximately 0.25- to 0.5-mi spacing; data reported every 20 s | From RTMS detectors | From RTMS detectors | | Segment-region between detector stations | 5, 15, and 60 min | Statewide system that collects data from individual TMCs |

| Minnesota | 36 | Single loop detectors in each lane reported every 20 s; spacing approximately 0.5 mi | From loop detectors | From loop detectors | Computed from volume and occupancy assuming an average effective vehicle length (vehicle length plus loop length) of 22 ft | Segment-region between detector stations | 5 min | |

| Oregon PORTAL Archived Data User Service | 41 | Loop traps in each lane reporting data every 20 s | From loop detectors | From loop detectors | From loop detectors | Segment-region halfway between detector stations | 5 min, 1-min data recoverable from 20-s data | |

| Washington State | 17 | Single loop detectors in each lane reported every 20 s; spacing approximately 0.5 mi | From loop detectors | From loop detectors | Computed from volume and occupancy by use of g factor | Segments defined by analyst reviewing spaces between detector locations | 1 and 5 min | |

Where loop detector traps are employed, vehicle length and speed may also be obtained, providing the potential to classify vehicles by length.
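The loop trap calculations can be sketched as follows. The trap spacing (measured between the leading edges of the two loops), the loop length, the actuation times, and the length class boundaries are all hypothetical values chosen for illustration; they are not taken from this report.

```python
def trap_speed_ftps(trap_spacing_ft, t_upstream_on_s, t_downstream_on_s):
    """Speed from the time a vehicle takes to travel between the two loops."""
    dt = t_downstream_on_s - t_upstream_on_s
    if dt <= 0:
        raise ValueError("downstream actuation must follow upstream actuation")
    return trap_spacing_ft / dt


def vehicle_length_ft(speed_ftps, on_time_s, loop_length_ft):
    """Distance traveled while one loop is occupied, less the loop length."""
    return speed_ftps * on_time_s - loop_length_ft


def length_class(length_ft, boundaries_ft=(20.0, 40.0)):
    """Classify by length; the boundaries are placeholders, not report values."""
    if length_ft < boundaries_ft[0]:
        return "short"
    if length_ft < boundaries_ft[1]:
        return "medium"
    return "long"


speed = trap_speed_ftps(16.0, 0.0, 0.2)       # 16 ft in 0.2 s
length = vehicle_length_ft(speed, 0.3, 6.0)   # 0.3 s on-time over a 6-ft loop
speed_mph = speed * 3600.0 / 5280.0
vehicle_class = length_class(length)
```

In this example the vehicle travels at 80 ft/s (about 54.5 mi/h) and is estimated to be 18 ft long, which falls in the shortest of the placeholder classes.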

In recent years, point detectors other than inductive loop detectors have become more common. The most commonly used technologies include frequency-modulated continuous-wave microwave radar detectors, passive acoustic detectors, and video processor-based detectors. While these technologies may offer advantages in terms of installation and maintenance cost as well as the ease of conveying data to a communications node point, they are generally considered to be less accurate than inductive loop detectors. Examples of these technologies and errors as reported by Hagemann are shown in table 23.(42) The errors often depend on the manufacturer's specific model, the type of mounting used, and the type of roadway environment. Weather may also affect performance. Supporting structures for these detectors are often located beyond the roadway shoulder.

Table 23. Error rate of different surveillance technologies in field tests.

| Technology | Manufacturer and Model | Mounting | Count Error (Percent) | Speed Error (Percent) |

|---|---|---|---|---|

| Inductive loop | | Pavement saw-cut | 0.1-3 | 1.2-3.3 |

| Pneumatic road tube | | Pavement | 0.92-30 | |

| Microwave radar | WHELEN® TDN 30 | Overhead | 2.5-13.8 | 1 |

| Microwave radar | EIS® Remote Traffic Microwave Sensor | Overhead | 2 | 7.9 |

| Active infrared | Schwartz Electro-Optics Inc. Autosense II® | Overhead | 0.7 | 5.8 |

| Passive infrared | ASIM Technology Ltd IR 254 sensor | Overhead | 10 | 10.8 |

| Video image processing | Econolite Control Products Inc. Autoscope Solo® | Side-fire | 5 | 8 |

| Video image processing | Econolite Control Products Inc. Autoscope Solo® | Overhead | 5 | 2.5-7 |

| Ultrasonic | Novax Industries Corp. Lane King® | Overhead | 1.2 | |

| Passive acoustic | SmarTek Systems, Inc. SAS-1 | Side-fire | 8-16 | 4.8-6.3 |

| Wireless sensor networks | Sensys Networks Inc. VSN240 | Pavement | 1-3 | |

Note: Blank cells indicate no data were provided.

6.1.2 Detector Station Location

During the design of a project, locations for point detector stations are often selected based on criteria such as ramp metering requirements or requirements to develop traveler information. Detector station locations based on these criteria may not satisfy the requirements for evaluation measures. At a minimum, volume and speed (obtained directly or inferred from other data) are required for each travel link (mainline section between ramp entry and/or exit locations as shown in figure 3) in order to compute system delay measures, fuel consumption, throughput, and emissions. For benefit evaluation purposes, supplementary detector stations may, in some cases, be required on links that would otherwise lack these data.
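The need for both volume and speed on every link can be illustrated with a simple delay calculation. The sketch below assumes a common formulation in which delay is the travel time in excess of the travel time at a reference speed, multiplied by the number of vehicles; the report's own delay definitions appear in other sections and may differ. The link data and function names are hypothetical.

```python
def link_delay_veh_hours(volume_veh, length_mi, speed_mph, reference_speed_mph):
    """Vehicle-hours of delay on one link for one period.

    Delay per vehicle is the travel time above the travel time at the
    reference speed; links running faster than the reference contribute zero.
    """
    if speed_mph <= 0 or reference_speed_mph <= 0:
        raise ValueError("speeds must be positive")
    extra_time_h = length_mi / speed_mph - length_mi / reference_speed_mph
    return volume_veh * max(0.0, extra_time_h)


def system_delay_veh_hours(links):
    """Sum delay over links given as (volume, length, speed, reference speed)."""
    return sum(link_delay_veh_hours(*link) for link in links)


# Two links for one 5-min period: (vehicles, miles, mi/h, reference mi/h)
links = [
    (150, 0.5, 30.0, 60.0),   # congested link
    (140, 0.8, 62.0, 60.0),   # link at or above the reference speed
]
total_delay = system_delay_veh_hours(links)
```

Only the first link contributes here, giving 1.25 vehicle-hours for the period. A link with no volume or no speed estimate cannot be included in the sum, which is why each link needs both.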

6.1.3 Traffic Data Screening and Data Imputation

Traffic management systems collect data from detectors for a wide variety of purposes. These systems generally include quality control techniques to validate the data and to synthesize missing data if the missing data would otherwise prevent the implementation of these functions. These techniques are briefly discussed in the following sections.

Data Screening

Most of the FMSs that are commonly used for performance evaluation purposes have the capability to screen the collected data for accuracy and, in some cases, synthesize data where screening has shown them to be missing or incorrect. The following discussion describes techniques that are used to perform these functions.
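A screening test on a single variable can be as simple as a range check. The sketch below flags variables that are missing or fall outside fixed limits. The limits shown are placeholders for illustration only; they are not the thresholds cited in this report, and the record layout and function name are assumptions.

```python
# Placeholder limits for illustration only; NOT the thresholds cited in this report.
LIMITS = {
    "volume": (0, 250),             # vehicles per lane per 5-min period
    "occupancy_pct": (0.0, 100.0),
    "speed_mph": (0.0, 100.0),
}


def screen_record(record, limits=LIMITS):
    """Return the names of variables that are missing or outside their limits."""
    failed = []
    for name, (low, high) in limits.items():
        value = record.get(name)
        if value is None or not (low <= value <= high):
            failed.append(name)
    return failed


good = screen_record({"volume": 120, "occupancy_pct": 9.5, "speed_mph": 58.0})
bad = screen_record({"volume": 120, "occupancy_pct": 140.0, "speed_mph": None})
```

The first record passes every check, while the second is flagged for an out-of-range occupancy and a missing speed. Flagged values would then be candidates for imputation.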

Smith and Venkatanarayana divide data screening tests into the following categories:(43)

Turner et al. provide the following acceptance thresholds for tests on a single variable:(15)

6.1.3.1 Data Imputation

Imputation is the process of filling in the gaps that occur from missing data due to equipment, software, or communication failures.(9) A number of techniques including simple historic averages, regression models, expectation maximization, and interpolations have been employed.
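Two of the simpler techniques, interpolation and historic averages, can be combined as in the sketch below. Gaps with valid neighbors on both sides are filled by linear interpolation, and gaps at the ends of the series fall back to a historic average for that period. This is one possible arrangement under stated assumptions, not a method prescribed by this report, and the data values are invented.

```python
def impute(series, historic):
    """Fill None gaps in a series of period values.

    Interior gaps are linearly interpolated between the nearest valid
    neighbors; gaps without a valid neighbor on both sides use the
    historic average for the same period.
    """
    filled = list(series)
    n = len(series)
    for i, value in enumerate(series):
        if value is not None:
            continue
        left = next((j for j in range(i - 1, -1, -1) if series[j] is not None), None)
        right = next((j for j in range(i + 1, n) if series[j] is not None), None)
        if left is not None and right is not None:
            frac = (i - left) / (right - left)
            filled[i] = series[left] + frac * (series[right] - series[left])
        else:
            filled[i] = historic[i]
    return filled


volumes = [None, 100, None, None, 130, None]   # 5-min volumes with gaps
historic = [90, 95, 105, 115, 125, 120]        # historic averages by period
filled = impute(volumes, historic)
```

The two interior gaps are filled with approximately 110 and 120, while the first and last periods take the historic values 90 and 120.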

TMC performance evaluation requirements depend on the purpose and objectives of the evaluation as well as the quality of the data collection equipment and software available. Errors for measured traffic data variables, such as volume, speed, and occupancy, may be classified as follows:

It is recommended that agencies that are planning to conduct a benefits evaluation program prepare a detailed plan for implementing each measure selected. This plan should include accuracy objectives, traffic variable error estimates, geographical coverage areas, and sample size requirements.

6.3.1 Probe-Based Technologies

In recent years, probe data have become increasingly popular for obtaining speed and travel time information. Estimating the system-oriented measures described in section 3 of this report also requires volume information.

The following probe technologies have been used for ITS applications:

Table 24. I-95 Corridor Coalition probe detection test.

| Speed Bin | Requirement Absolute Average Speed Error < 10 mi/h | Requirement Speed Error Bias < 5 mi/h | Hours of Data Collection | Percent of Total Data |

|---|---|---|---|---|

| 0-30 mi/h | 5.3 | 2.7 | 800.5 | 3.4 |

| 30-45 mi/h | 6.3 | 2.1 | 777.5 | 3.3 |

| 45-60 mi/h | 2.4 | 0.0 | 4,625.0 | 19.4 |

| > 60 mi/h | 2.6 | -2.3 | 17,566.2 | 73.9 |

| All speeds | 2.8 | -1.5 | 23,769.2 | 100 |
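The error measures in table 24 can be illustrated with a short calculation. The sketch below assumes that the average absolute speed error is the mean of the absolute differences between probe and reference speeds, and that the bias is the mean signed difference (probe minus reference); these definitions and the sample speeds are assumptions. The second part checks that weighting the per-bin values in table 24 by hours of data collection reproduces the "All speeds" row to within rounding.

```python
def speed_error_stats(probe_mph, reference_mph):
    """Return (average absolute error, bias) for paired speed observations."""
    diffs = [p - r for p, r in zip(probe_mph, reference_mph)]
    avg_abs_error = sum(abs(d) for d in diffs) / len(diffs)
    bias = sum(diffs) / len(diffs)
    return avg_abs_error, bias


# Invented paired observations, for illustration only.
avg_abs_error, bias = speed_error_stats([52.0, 61.0, 33.0], [50.0, 64.0, 30.0])

# Per-bin values from table 24: (bin, abs error mi/h, bias mi/h, hours)
bins = [
    ("0-30", 5.3, 2.7, 800.5),
    ("30-45", 6.3, 2.1, 777.5),
    ("45-60", 2.4, 0.0, 4625.0),
    (">60", 2.6, -2.3, 17566.2),
]
total_hours = sum(h for *_, h in bins)
weighted_abs_error = sum(e * h for _, e, _, h in bins) / total_hours
weighted_bias = sum(b * h for _, _, b, h in bins) / total_hours
```

Rounded to one decimal place, the hours-weighted values are 2.8 mi/h and -1.5 mi/h, which suggests that the "All speeds" row is dominated by the large share of data collected above 60 mi/h.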

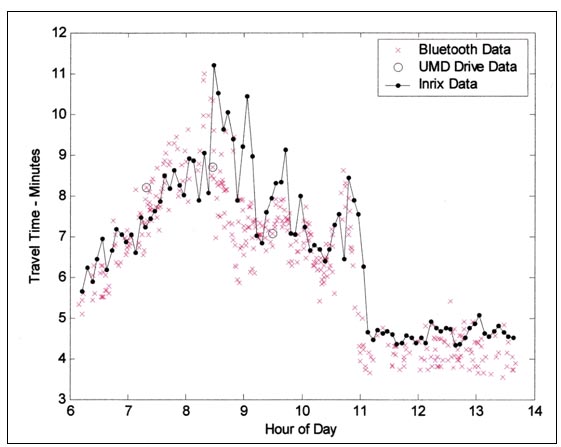

Figure 56. Graph. Comparison of INRIX® data with Bluetooth® data and measured travel time.

6.3.2 Use of Probes for Benefits Evaluation

At the time this report was written, probe information developed by service providers, Bluetooth® probe readers, and toll tag readers appeared to have the potential to support travel time-related measures (measures Q.1 and Q.2 in table 6). As with point detection, a well-designed evaluation program is required to assure that the results are consistent with the objectives of the evaluation.

To obtain data for the system-based measures (measures D, F, T, and E in table 6), this information must be supplemented by volume information for each mainline link. Where an ITS is not sufficiently equipped with point detectors to meet this requirement but is equipped with closed circuit television camera coverage for these links, it may be possible to use video processor detectors located at the TMC to develop this information. During evaluation periods, the field of view for these cameras cannot be changed. As a result, it will be possible to develop only a limited dataset for this situation.

As indicated in section 5.1.4 in this report, signal timing evaluation is traditionally performed using manual techniques. Specifically, intersection delay is measured by manual observation of queues, and travel time is obtained by floating vehicle techniques. Evaluations of this type are often conducted in conjunction with a signal retiming project. Because of the number of observations and floating vehicle runs required to obtain statistically significant data for different time periods, these evaluations may be expensive if conducted frequently.
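The number of runs or observations needed can be estimated before the study with a standard sample size calculation. The sketch below uses the normal approximation, in which the required sample size is the square of the product of the confidence coefficient and the standard deviation divided by the permitted error. The standard deviation and permitted error values are invented, and for small samples a t-distribution value would normally replace the fixed coefficient.

```python
import math


def required_runs(std_dev, permitted_error, z=1.96):
    """Runs needed for the sample mean to fall within permitted_error.

    Uses the normal approximation n = (z * s / e)^2, rounded up;
    z = 1.96 corresponds to roughly 95 percent confidence.
    """
    if std_dev < 0 or permitted_error <= 0:
        raise ValueError("std_dev must be >= 0 and permitted_error > 0")
    return max(1, math.ceil((z * std_dev / permitted_error) ** 2))


# Travel time standard deviation of 30 s (illustrative value)
runs_10s = required_runs(30.0, 10.0)   # permitted error of 10 s
runs_5s = required_runs(30.0, 5.0)     # permitted error of 5 s
```

Halving the permitted error from 10 s to 5 s raises the requirement from 35 to 139 runs per time period, which illustrates why frequent manual evaluations become expensive.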

In recent years, there has been considerable interest in researching automatic data collection and reduction processes to obtain intersection delay data. The following techniques have been described:

Figure 57. Flowchart. The SMART-SIGNAL system architecture.

The National ITS Architecture provides general guidelines regarding archived data user services. The development of standards was assigned to ASTM subcommittee E17.54. The following relevant standards have been developed:

The functions of the evaluation vary with the time phase of the project. When the project becomes operational, the initial evaluations often center on the benefits achieved by the project in a before-after context. As time progresses, interest becomes more focused on the year-to-year benefit changes achieved by improvements to TMC operations as well as demand changes. Table 25 identifies general approaches that may be employed as the evaluation emphasis changes.

Table 25. Evaluation approaches.

| Evaluation Objective | Project Phase | Possible Evaluation Approach |

|---|---|---|

| Continuous year-to-year evaluation | Project operational | Use methodologies as described in this report. Consider adding supplementary surveillance to correct deficiencies in providing automated data. |

| Before-after evaluation followed by year-to-year evaluation | Project complete or under construction, but no before data available | Use methodologies described in this report for after data. Evaluate after conditions using a simulation model and calibrate the simulation to the field results. Use calibrated simulation to evaluate before conditions. |

| Before-after evaluation followed by year-to-year evaluation | Project in design or design has not yet started | Concurrently develop evaluation plan and provide field devices for data collection consistent with methodologies described in this report. After implementation is complete, using the project's field devices, collect data for a period of time. This will serve as before data. Subsequently initiate ITS operation and collect after data. |

The following steps are required to implement the benefits evaluation process described in this report: