U.S. Department of Transportation

Federal Highway Administration

1200 New Jersey Avenue, SE

Washington, DC 20590

202-366-4000

Federal Highway Administration Research and Technology

Coordinating, Developing, and Delivering Highway Transportation Innovations

| TECHNICAL REPORT |

| This report is an archived publication and may contain dated technical, contact, and link information |

| Publication Number: FHWA-HRT-17-036 Date: March 2018 |

This evaluation sought to understand the effects of FHWA R&T activities on the implementation of the Eco-Logical approach to transportation project delivery by State transportation departments and MPOs. This chapter includes a logic model to describe the Eco-Logical Program, the evaluation hypothesis and questions, and the methodology used to collect and analyze data to answer the evaluation questions.

The evaluation sought to determine how effective FHWA’s efforts were in disseminating information to facilitate adoption, determine to what extent stakeholders have adopted the Eco-Logical approach, and identify business and environmental impacts obtained by agencies implementing the Eco-Logical approach.

A logic model is defined by the authors of this report as a series of statements linking program components (i.e., inputs, activities, outputs, outcomes, and impacts) in a causal chain. It describes the relationship between program resources, planned activities, and expected results. It is not intended to be a comprehensive or linear description of all program processes and activities but rather to make explicit how program stakeholders expect program activities to effect change. The logic model helps to explain the theories of change that drive a program's design and that an evaluation can test (see figure 1).

The inputs, activities, and outputs in the logic model are described in table 1 in chapter 1. Several of the activities and outputs involved FHWA in collaboration with other agencies and organizations.

FHWA stakeholders, including State transportation departments and MPOs, may choose to adopt any or all nine steps of the Eco-Logical approach (see subsequent section, State Transportation Department/MPO Outcomes, for a description of the steps). These nine implementation steps are activities that agencies can pursue to realize the outcomes and impacts described in the following two sections.

The Eco-Logical Program provides strategies to help agencies meet transportation infrastructure needs without compromising the environment. The evaluation team identified three outcomes for the Eco-Logical Program and related them to the nine-step process for implementing the Eco-Logical approach. The outcomes and their accompanying steps are as follows:[2]

The first outcome describes relationships with partners. The second and third outcomes combine the five types of mitigation into two groups. The Council on Environmental Quality regulations (40 CFR 1508.20) define mitigation as follows:(44)

The second outcome includes the two types of mitigation that can be accomplished in planning, and the third outcome includes the remaining three types of mitigation, which tend to occur in project development.

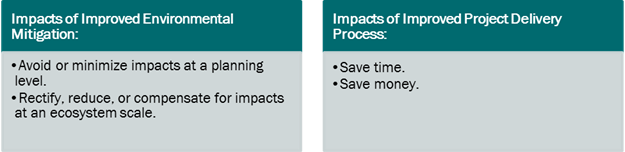

The Eco-Logical Program is intended to achieve two overarching impacts: (1) improved environmental mitigation and (2) improved project delivery processes (see figure 2). The Eco-Logical Program encourages early identification and consideration of mitigation opportunities at the planning level (i.e., to identify and consider issues earlier) and at an ecosystem scale (i.e., to consider effects in a broader geographic context than at the project level).

Source: FHWA.

Figure 2. Illustration. Impacts of improved environmental mitigation and project delivery process.(3)

The evaluation team identified the following hypothesis for the program evaluation:

Hypothesis: The Eco-Logical Program and approach have contributed to improved project delivery processes and environmental mitigation.

Table 2 lists the key questions that link the evaluation research to the program logic model. The table also includes measures of effectiveness for each question.

The evaluation team used the following five data collection and analysis methodologies to inform this evaluation:

The following subsections provide additional detail about these five data collection and analysis methodologies.

The evaluation team conducted a literature review to gain an understanding of the Eco-Logical Program, its stakeholders and users, and FHWA outreach activities and outputs. The evaluation team also conducted a qualitative analysis.

Findings from the initial literature review are detailed in table 1 (see chapter 1) and were used to answer evaluation question 1: How has FHWA enabled State transportation departments and MPO stakeholders to adopt the Eco-Logical approach?

The evaluation team determined it would be most appropriate to focus the evaluation on the effectiveness of implementation by recipients of FHWA funding through the 2007 Eco-Logical Grants Program and the 2013 SHRP2 IAP rather than attempt to identify and assess general awareness of the program and approach by hundreds of agencies around the United States.

A more extensive document review was used to provide detailed information for the qualitative coding analysis as well as for the analysis of the Eco-Logical approach’s steps completed by recipients of FHWA funding. Documents reviewed included the Eco-Logical Program’s annual reports from 2008 to 2015 as well as notes from the interviews with recipients of FHWA funding that informed those annual reports. (See references 13, 15, 19, 25, 34, and 45.)

Appendix A includes a list of recipients and their project descriptions, appendix B shows interviewee data available from 2008 to 2016, appendix C provides lists of interview questions from each Eco-Logical annual report from 2008 to 2015, and appendix D includes questions from a program-sponsored (FHWA and AASHTO) 2014 questionnaire conducted for the 2007 grantee recipients.(25)

On October 14–15, 2015, FHWA and AASHTO jointly held an Implementing Eco-Logical IAP Peer Exchange for SHRP2 IAP recipient agencies to share accomplishments and lessons learned from their projects with each other and with other transportation and resource agencies.(42) Participants included the following (asterisks denote IAP funding recipients):

A member of the evaluation team participated in the peer exchange to collect data from attendees through targeted listening sessions and general observations. Findings from the peer exchange helped the evaluation team to determine next steps for data collection, including conducting stakeholder interviews. Appendix E lists questions asked by the evaluation team to peer exchange participants and a summary of their responses.

In 2016, the evaluation team interviewed recipients from both the 2007 Eco-Logical Grant Program and 2013 SHRP2 IAP. The purpose was to follow up on program-sponsored interviews and to ask targeted questions to inform the evaluation hypothesis and questions. The respondents included 10 MPOs, 6 State transportation departments, and 4 recipients categorized as “other” (see table 14 for a list of all interviewees). All respondents spoke with the evaluation team via a conference call except for one agency, which sent in written responses to the interview questions. Appendix F provides a list of 2016 evaluation interview questions.

To address the intricacies in R&T evaluation, the evaluation team interviewed many stakeholders. The team assured all interviewees that their identities would remain confidential in order to elicit less biased answers. Throughout the document, when interviewees are quoted, the month and year of the interview and the name of the interviewer are noted, but the interviewee names have been redacted. However, to maintain continuity and comparability between interviewee responses, a generic title is attributed to each interviewee. This information is placed in a footnote for each interview.

The evaluation team also collected input from FHWA Eco-Logical Program’s points of contact through interviews and participation in a program visioning session to understand FHWA’s perspective on benefits and challenges, FHWA’s role in encouraging stakeholder implementation, and FHWA’s goals for the program’s past, present, and future.

Based on a review of program annual reports and follow-up evaluation interviews, the evaluation team determined which Eco-Logical approach steps recipients completed, both during and following their periods of performance. (See references 13, 15, 19, 25, 34, and 45.) With the exception of one annual report, the program has generally not tracked recipient implementation of the Eco-Logical approach against the nine steps.(45)

The purpose of this analysis was to gain insight into the areas where agencies tend to focus their efforts in order to inform evaluation question 2: How are State transportation departments and MPO stakeholders incorporating the Eco-Logical approach into their business practices? Section 3.2 in chapter 3 of this report provides results of the steps analysis. The analysis also helped to identify potential areas where the program may choose to focus attention in the future, as described in chapter 4 of this report. Not every agency had the goal of completing all nine Eco-Logical steps, and the evaluation did not seek to appraise recipients based on the number of steps they completed.
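The steps analysis described above amounts to a tally of how many recipients completed each of the nine steps. The following is a minimal sketch of such a tally in Python, not the evaluation team's actual tooling. The function name, the input layout, and the recipient labels in the usage note are assumptions made for illustration only.

```python
from collections import Counter

NUM_STEPS = 9  # the Eco-Logical approach has nine steps


def recipients_per_step(completed):
    """Count, for each step 1-9, how many recipients completed it.

    `completed` maps a recipient label to the step numbers that the
    recipient completed (hypothetical input layout).
    """
    counts = Counter()
    for recipient, steps in completed.items():
        for step in set(steps):  # ignore a step listed twice for one recipient
            if not 1 <= step <= NUM_STEPS:
                raise ValueError(f"{recipient}: step {step} is out of range")
            counts[step] += 1
    # Report every step, including those that no recipient completed.
    return {step: counts[step] for step in range(1, NUM_STEPS + 1)}
```

For example, calling `recipients_per_step({"Recipient A": [1, 2, 3], "Recipient B": [1, 9]})` with these made-up labels reports two recipients for step 1 and none for step 4. The tally shows where effort concentrates across recipients; it deliberately does not rank recipients by the number of steps completed.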

The evaluation team developed a database of stakeholder-identified benefits, challenges, and recommendations of the Eco-Logical Program. Information was gathered from interview notes that informed program annual reports from 2008 to 2015, responses from an Eco-Logical Grant Program questionnaire conducted by the program in 2014, input from the program-sponsored IAP peer exchange held in 2015, and interview notes from follow-up interviews conducted by the evaluation team in 2016. (See references 13, 15, 19, 25, 34, and 45.) Relevant statements were copied directly from interview notes into the database with information on the data source, including agency, agency type, and year.[3] Each statement was then coded as a benefit, challenge, recommendation to FHWA, or recommendation to peer agencies. Each statement was also coded into a theme and category (or sub-theme). Coding the data makes it easier to search, make comparisons, and identify patterns that require further investigation.
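The coding database and the duplicate rule in footnote 3 can be illustrated with a short Python sketch. This is an illustration under stated assumptions, not the evaluation team's actual database. The class and field names are hypothetical, and including the statement type (benefit, challenge, or recommendation) in the duplicate key is an assumption; the footnote names only agency, year, and theme-category combination.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedStatement:
    """One coded statement (hypothetical field names)."""
    agency: str
    agency_type: str  # e.g., "MPO" or "State transportation department"
    year: int
    kind: str         # benefit, challenge, or one of the two recommendation types
    theme: str
    category: str
    text: str


def occurrence_key(s):
    # One occurrence = one agency, in one year, within one theme-category
    # combination. Including `kind` here is an assumption of this sketch.
    return (s.agency, s.year, s.kind, s.theme, s.category)


def deduplicate(statements):
    """Keep the first statement seen for each occurrence key."""
    seen = set()
    unique = []
    for s in statements:
        key = occurrence_key(s)
        if key not in seen:
            seen.add(key)
            unique.append(s)
    return unique


def count_by_theme_category(statements):
    """Count deduplicated statements per (theme, category) pair."""
    return Counter((s.theme, s.category) for s in deduplicate(statements))
```

With this structure, two statements from the same agency in the same year that fall under the same type, theme, and category count once, while the same point raised by a different agency counts separately.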

Each theme and category was associated with an evaluation question, as provided in table 3. Themes and their associated categories are defined following the table. See appendix I for the number of comments assigned to the qualitative coding themes and categories.

| Theme | Category | Evaluation Question |
|---|---|---|
| Communication | Knowledge | 1 |
| Communication | Outreach | 1 |
| Resources | Funding | 1 and 2[4] |
| Resources | Eco-Logical Grant Program or IAP | 1 |
| Resources | Staff (includes staff time) | 2 |
| Relationships | Credence | 1 |
| Relationships | Data sharing | 2 |
| Relationships | External stakeholders (includes buy-in) | 2 |
| Relationships | Internal stakeholders (includes buy-in) | 2 |
| Operations | Champion/leader | 2 |
| Operations | Data use | 2 |
| Operations | Environmental impacts | 3 |
| Operations | External factors | 2 |
| Operations | Process or process change | 2 |
| Operations | Process impacts | 3 |
| Operations | Staff turnover | 2 |
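
The theme and category assignments in table 3 can be expressed as a simple lookup, which makes it straightforward to pull all coded categories that bear on a given evaluation question. The Python sketch below transcribes the table; the variable and function names are assumptions made for illustration and do not come from the report.

```python
# (theme, category) -> evaluation question numbers, transcribed from table 3.
QUESTIONS_BY_CODE = {
    ("Communication", "Knowledge"): (1,),
    ("Communication", "Outreach"): (1,),
    ("Resources", "Funding"): (1, 2),  # funding comments can inform either question
    ("Resources", "Eco-Logical Grant Program or IAP"): (1,),
    ("Resources", "Staff (includes staff time)"): (2,),
    ("Relationships", "Credence"): (1,),
    ("Relationships", "Data sharing"): (2,),
    ("Relationships", "External stakeholders (includes buy-in)"): (2,),
    ("Relationships", "Internal stakeholders (includes buy-in)"): (2,),
    ("Operations", "Champion/leader"): (2,),
    ("Operations", "Data use"): (2,),
    ("Operations", "Environmental impacts"): (3,),
    ("Operations", "External factors"): (2,),
    ("Operations", "Process or process change"): (2,),
    ("Operations", "Process impacts"): (3,),
    ("Operations", "Staff turnover"): (2,),
}


def categories_for_question(question):
    """Return the (theme, category) pairs linked to an evaluation question."""
    return [code for code, questions in QUESTIONS_BY_CODE.items()
            if question in questions]
```

For instance, `categories_for_question(3)` returns the two Operations categories that address impacts: environmental impacts and process impacts.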

Communication

The communication theme includes benefits, challenges, and recommendations related to the interchange or transmission of information about the Eco-Logical approach. Categories within this theme include the following:

Resources

The resources theme includes benefits, challenges, and recommendations related to the critical assets for the Eco-Logical approach to function effectively in agencies. Categories within this theme include the following:

Relationships

The relationships theme includes benefits, challenges, and recommendations related to making connections between recipients and their stakeholders. Categories within this theme include the following:

Operations

The operations theme includes benefits, challenges, and recommendations related to the essential assets, functions, and considerations required to manage an organization or program effectively. Categories within this theme include the following:

This section describes considerations that could affect data collection and analysis, which the evaluation team identified in the evaluation plan developed internally for this report. The evaluation team identified several techniques to address each challenge, as described in table 4.

[2] While step 9 appears under outcome 2, it is not out of order. The outcomes simply group related steps.

[3] The evaluation team sought to remove duplicate statements where, for example, the same recipient repeated the same benefit during the same interview. A single occurrence of a statement is one agency (including one or more individuals) on an interview call in 1 yr within an evaluation theme-category combination.

[4] The evaluation team recognized that comments related to funding could describe either evaluation question 1 (how FHWA enabled agencies) or question 2 (factors that supported or were challenges to agencies’ implementation of the Eco-Logical approach).