U.S. Department of Transportation

Federal Highway Administration

1200 New Jersey Avenue, SE

Washington, DC 20590

202-366-4000

Federal Highway Administration Research and Technology

Coordinating, Developing, and Delivering Highway Transportation Innovations

| REPORT |

| This report is an archived publication and may contain dated technical, contact, and link information |


| Publication Number: FHWA-HRT-15-047 Date: August 2015 |


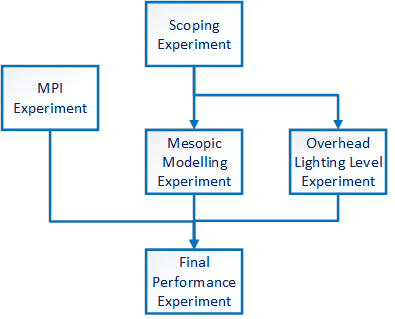

Five experiments were undertaken as part of this project. The progression of the experiments is shown in figure 22. Later experiments were built on two basic efforts undertaken in the first two experiments, a scoping experiment and an MPI experiment. Those two experiments were designed to explore the range of possible experimental conditions and to establish the boundaries of the later efforts. The two subsequent experiments, the overhead-lighting level and mesopic modeling experiments, were performed to investigate other facets of spectral interactions. A final performance experiment investigated the results of the first four experiments in a full driving environment. Each of the experiments is summarized in the following paragraphs.

Figure 22. Flowchart. Relationship among experiments performed.

The scoping experiment compared the detection and recognition distances of pedestrians and small targets under two different overhead-lighting sources, two overhead-lighting levels, two headlamp colors, and two headlamp levels. The objects detected and recognized were four different colors and were located both along the roadway and in the periphery of the roadway. The purpose of this experiment was to provide an initial review of the interactions of light source spectrum and level and to identify the specific characteristics of the lighting conditions to be further investigated.

The MPI system performance experiment investigated the effect of using a momentary peripheral illuminator on object visibility. The goal of this experiment was to investigate whether the possible spectral improvement in the periphery could be enhanced by the light source type. The experiment considered the MPI system under two headlamp light source characteristics, two headlamp light levels, three different MPI behaviors, and two different ambient lighting conditions. The results of this experiment were used to identify the critical components of the MPI system and the impact of the light source spectrum on MPI performance, and to identify variables to be carried forward to the final performance experiment.

The overhead-lighting level experiment was designed to investigate the interaction between overhead lighting and vehicle headlamps on visibility. The spectral effects of a light source depend on the adaptation level of the human eye, and headlamps may be the dominant aspect in a driver’s field of view; thus, headlamps may be the main contributor to adaptation level. This experiment was designed to investigate this aspect of the lighted area by looking at the detection and recognition of both small objects and pedestrians in a carefully controlled manner by dimming the overhead-lighting system from 100 percent to off, in 10-percent increments.

The mesopic modeling experiment was designed to provide a real-world validation of the CIE Recommended Model for Mesopic Photometry.(1) Because most of the previous work performed for mesopic models was done in highly controlled environments, even for the experiment that included driving, external validation of the model was required.(42) The experiment implemented here used both a static and a dynamic approach to the detection of objects in the roadway. These results were then compared with the expected results of the mesopic models.

The final performance experiment combined all of the efforts of the previous experiments to perform an overall assessment of the spectral aspects of the overhead lighting. In all, three overhead-lighting systems, off-axis pedestrians, five different adaptation luminance levels, different vehicle speeds, and three pedestrian clothing colors were used to explore the spectral effects of the overhead-lighting system. This experiment was a true driving experiment, used to assess the overall applicability of spectral aspects of the light source in a realistic scenario.

There were common elements to each of the experiments undertaken as part of the project. In particular, each experiment was performed on the Virginia Smart Road (hereafter referred to as the Smart Road) using the same overhead-lighting systems and vehicle headlamp systems. Similar objects for detection were also used in all of the experiments. Common components and aspects of the experiments are described in this chapter. Experimental methods unique to each experiment are described in the chapters on those experiments.

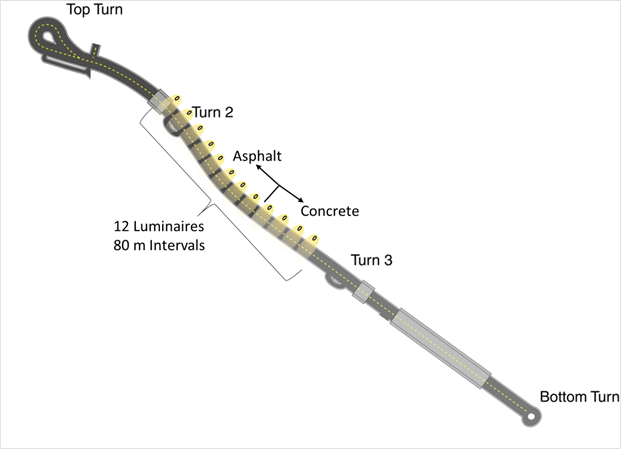

The research was conducted at the Virginia Tech Transportation Institute’s (VTTI) Smart Road. The Smart Road is a 3.54-km (2.2-mi) restricted-access test track with guard rails, pavement markings, and a variety of overhead-lighting installations, as shown in figure 23. The Smart Road has two pavement surfaces (asphalt and concrete), and its overhead-lighting levels can be adjusted to match luminance levels between lighting types, if required.

During the experiments, participant drivers completed a number of laps on the Smart Road, turning their vehicles around at the top turn, the bottom turn, or turn three, depending on which protocol they were following.

Figure 23. Diagram. Smart Road test track.

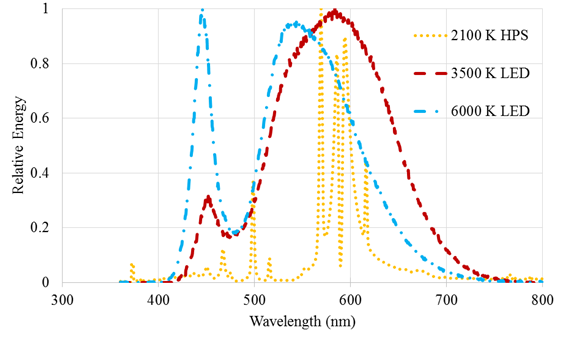

For this project, three lighting systems installed on the Smart Road were used. One was a traditional HPS system, and two were solid-state LED systems. All luminaires were dimmable, mounted 15 m (49 ft) high, and spaced 80 m (262 ft) apart. The characteristics of the luminaires used for this project are listed in table 2, and their SPDs are shown in figure 24.

Table 2. Overhead-lighting system characteristics.

| Lighting System | Wattage | Color Temperature (K) | Luminaire Type | Driver |
| --- | --- | --- | --- | --- |
| 2,100-K HPS | 150 W | 2,100 | General Electric model M2AC-15S4-A1-GMC2-1253, Type II M | Greentek 0- to 10-V dimming |
| 3,500-K LED | 234 W | 3,500 | Cree® LEDway® BetaLED® model STR-LWY, Type II M. 3500 | Advance 0- to 10-V dimming |
| 6,000-K LED | 234 W | 6,000 | Cree® LEDway® BetaLED® model STR-LWY, Type II M. 6000 | Advance 0- to 10-V dimming |

Figure 24. Graph. Mesopic modeling experiment—SPDs of overhead-lighting types used in the study.

Each of the lighting systems was equipped with a wireless control system to provide dimming and on/off control for the luminaires. The control system was a Synapse® Wireless, Inc., SNAP DIM-10 0- to 10-V system that provided simultaneous 255-step remote-dimming control for all three luminaire systems.
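As a rough illustration of how a dimming percentage could be expressed on a 255-step, 0- to 10-V control, the following minimal sketch maps the 10-percent increments used in the overhead-lighting level experiment to a step number and a nominal control voltage. The linear mapping, the step numbering from 0 to 255, and the function name `dim_setting` are assumptions made for illustration; they are not taken from the control system's documentation, and the actual light output at each setting was established by calibration rather than by a formula like this.

```python
# Sketch only: linear mapping from dimming percentage to control step and voltage.
# Assumptions (not from the report): steps are numbered 0..255, 0 V is off,
# 10 V is full output, and the mapping between them is linear.

MAX_STEP = 255     # "255-step" control, assumed to mean steps 0..255
MAX_VOLTS = 10.0   # upper end of the 0- to 10-V control range


def dim_setting(percent):
    """Return (control step, nominal control voltage) for a dimming percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    step = round(percent / 100 * MAX_STEP)
    volts = step / MAX_STEP * MAX_VOLTS
    return step, volts


# Dimming levels from 100 percent down to off, in 10-percent increments.
levels = list(range(100, -1, -10))
schedule = {pct: dim_setting(pct) for pct in levels}

if __name__ == "__main__":
    for pct, (step, volts) in schedule.items():
        print(f"{pct:3d}% -> step {step:3d}, {volts:5.2f} V")
```

A nominal control voltage does not translate directly into a luminance level, which is one reason a measured calibration of each lighting system is needed.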

The overhead-lighting systems were calibrated using the VTTI-developed Roadway Lighting Mobile Measurement System (RLMMS).(23) This allowed each system to be dimmed accurately and allowed lighting level characteristics to be matched between lighting systems depending on the conditions required for the experiments.

Two mid-sized sport-utility vehicles with the same body style and internal layout were used in the study. Both were instrumented with digital audio and video recorders, luminance cameras (described under Luminance-Based Systems in the Procedures section of this chapter), small monitors, and keyboards. The data collected included driving distance, vehicle speed, Global Positioning System (GPS) location, and the user-input button used to calculate detection and color recognition distances. During the experiments, the rear view and side mirrors were covered to prevent headlamp glare from the other test vehicle.

HID headlamps were installed on the test vehicles. The headlamps selected were Hella™ 90-mm Bi-Xenon projector lamps, rated at 35 W, that use an internal shutter to provide an SAE beam pattern with a sharp vertical cutoff. A single 1-F capacitor stabilized headlamp input voltage on each vehicle. Low beams only were used. Before each night of experiments, the headlamps were aligned according to manufacturer specifications using a Hopkins® Manufacturing Hoppy Vision 100 laser aiming system.

Gels were placed in front of the headlamps and were mounted so they could be easily changed, as shown in figure 25.

Figure 25. Photo. Test vehicle with headlamps and interchangeable gels.

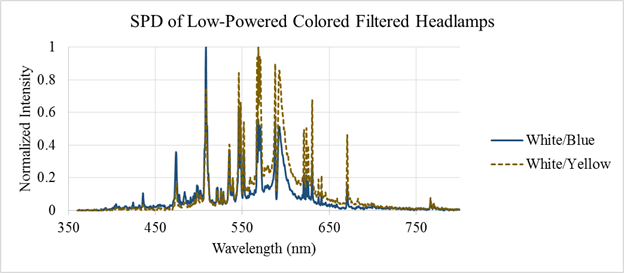

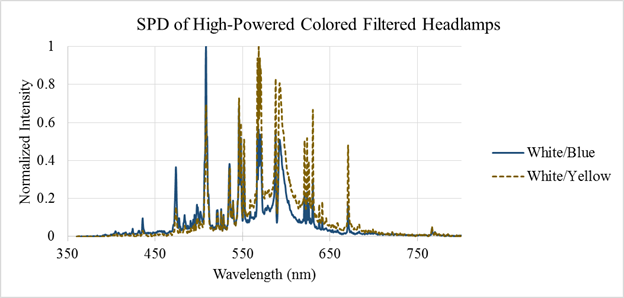

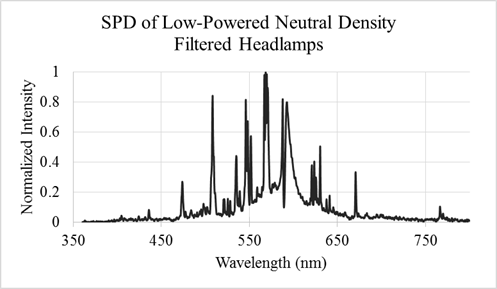

Depending on the experiment, one of five sets of filters was used: white/yellow high intensity, white/yellow low intensity, white/blue high intensity, white/blue low intensity, and neutral density low intensity. Recommendations for the headlamp configurations were provided by the University of Michigan Transportation Research Institute. The headlamp and gel intensity and spectral characteristics are listed in table 3, and the SPDs of the headlamps using different filters are shown in figure 26 through figure 28. In the first two of these figures, it can be seen that the blue light is truncated by the yellow filter and vice versa.

Table 3. Characteristics of headlamp filters.

| Headlamp Color | Intensity | Transmittance | Correlated Color Temperature (K) |
| --- | --- | --- | --- |
| White/yellow, high | High | 0.49 | 2,926 |
| White/yellow, low | Low | 0.38 | 2,910 |
| White/blue, high | High | 0.44 | 5,357 |
| White/blue, low | Low | 0.31 | 5,120 |
| Neutral density | Low | 0.34 | 3,530 |

Figure 26. Graph. SPD of low-power, color-filtered headlamps.

Figure 27. Graph. SPD of high-power, color-filtered headlamps.

Figure 28. Graph. SPD of low-power, neutral-density-filtered headlamps.

Depending on the experiment, yellow filters were used to create an SPD similar to HPS roadway lighting, and blue filters were used to create an SPD similar to LED headlamps. When the 50‑percent filters were used, the headlamps were about the same intensity as typical HID headlamps installed in vehicles. For the white/yellow headlamp configurations, the CCT was slightly different between the high and low settings, because the team used standard, off-the-shelf filters (gels) to reduce transmittance, and these off-the-shelf gels did not necessarily have precisely the same color properties. The same applied to the white/blue headlamp configurations.

For each of the experiments, a visual object was used to measure visual performance. Dependent variables characterizing visual performance were detection distance, orientation-recognition distance, and color-recognition distance, depending on the experiment. The characteristics, type, size, and color of the visual objects were standardized. The objects were either pedestrians or small targets and are described in the following subsections.

Pedestrians

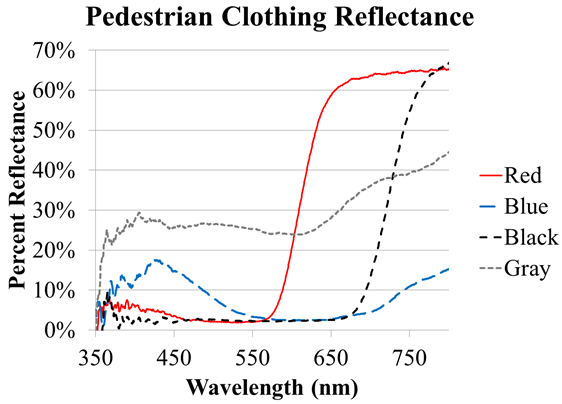

Confederates acted as pedestrians. Pedestrians wore four colors of scrubs, depending on the experiment—red, black, gray, or blue—as shown in figure 29. Pedestrians were not allowed to wear shoes with reflective materials or any other reflective clothing or accessories.

Figure 29. Photo. Clothing colors on confederate pedestrian.

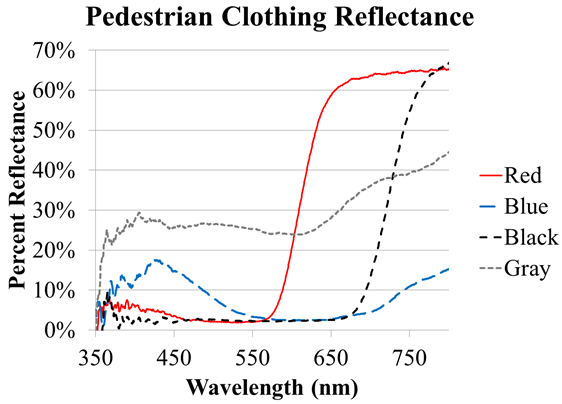

Red was chosen to highlight the amber hue of the HPS overhead lighting and the yellow-filtered headlamps. Blue was chosen because LED luminaires have a blue component and because two headlamp configurations are white/blue. Gray was chosen as a more neutral color, and black was chosen to represent a worst-case, least-visible scenario for a pedestrian at night. The spectral reflectance of the four clothing colors, calculated as a percentage relative to a diffuse white reflector, is shown in figure 30. The integrals of the reflectances for the four clothing colors, calculated between 360 and 800 nm using the trapezoid rule and weighted for the eye’s spectral sensitivity, are listed in table 4. These integrals give a direct comparison of how visible these colors would be in ideal conditions in bright light (photopic) and very low light (scotopic).

Figure 30. Graph. Pedestrian clothing reflectance.

Table 4. Integral of pedestrian clothing reflectance.

| Clothing Color | Red | Blue | Black | Gray |
| --- | --- | --- | --- | --- |
| Integral of reflectance (photopic) | 11.0 | 4.3 | 2.5 | 26.9 |
| Integral of reflectance (scotopic) | 2.7 | 8.1 | 2.4 | 25.3 |
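The sensitivity-weighted integral described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the calculation used to produce table 4: the bell-shaped curves stand in for the CIE photopic and scotopic sensitivity functions (only their approximate peak wavelengths, about 555 nm and 507 nm, are realistic), the reflectance samples are hypothetical, and dividing by the integral of the sensitivity curve is an assumed normalization that the text does not specify.

```python
import math


def trapezoid(xs, ys):
    """Trapezoid-rule integral of samples ys taken at points xs."""
    return sum(
        (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i]) / 2.0
        for i in range(len(xs) - 1)
    )


def bell_curve(wavelength_nm, peak_nm, width_nm=45.0):
    """Placeholder sensitivity curve peaking at 1.0; NOT the CIE tabulated data."""
    return math.exp(-0.5 * ((wavelength_nm - peak_nm) / width_nm) ** 2)


def weighted_reflectance(wavelengths, reflectance_pct, sensitivity):
    """Sensitivity-weighted mean reflectance, in percent of diffuse white.

    The division by the integral of the sensitivity curve is an assumed
    normalization chosen for this sketch.
    """
    weighted = [r * s for r, s in zip(reflectance_pct, sensitivity)]
    return trapezoid(wavelengths, weighted) / trapezoid(wavelengths, sensitivity)


# Integration range from the text: 360 to 800 nm (5-nm spacing is an assumption).
wavelengths = list(range(360, 801, 5))
photopic = [bell_curve(w, 555.0) for w in wavelengths]   # bright-light stand-in
scotopic = [bell_curve(w, 507.0) for w in wavelengths]   # low-light stand-in

# Hypothetical reflectance spectra (percent), for illustration only.
gray_like = [25.0 for _ in wavelengths]
red_like = [60.0 if w > 600 else 5.0 for w in wavelengths]

gray_photopic = weighted_reflectance(wavelengths, gray_like, photopic)
red_photopic = weighted_reflectance(wavelengths, red_like, photopic)
red_scotopic = weighted_reflectance(wavelengths, red_like, scotopic)
```

With these stand-in curves, a sample that reflects mostly long wavelengths scores higher under the photopic weighting than under the scotopic weighting, which is the same direction as the red column in table 4. The magnitudes are not comparable to the table, because neither the measured spectra nor the tabulated sensitivity functions are used here.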

Targets

Participants also identified targets during the experiment. The targets represented roadway obstacles and were 18 by 18 cm (7 by 7 inches)—small and difficult to see but still potentially dangerous to drivers. The targets were two-dimensional to remove the effect that viewing angle has on a three-dimensional object. Their flat faces facilitated the team’s collection of accurate luminance and contrast data, and they were designed to break if a participant ran them over.

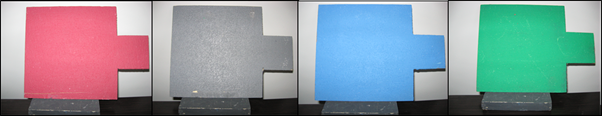

The targets were painted one of four colors: red, gray, blue, or green. The red, blue, and green were chosen because they are additive primaries; the red, blue, and gray were chosen for the same reasons that they were used on the pedestrians’ scrubs. Photos of the targets are shown in figure 31, and the spectral reflectance of the targets is shown in figure 32. The integrals of the reflectances for the four target colors, calculated between 360 and 800 nm using the trapezoid rule and weighted for the eye’s spectral sensitivity, are listed in table 5.

Figure 31. Photo. Targets.

Figure 32. Graph. Target reflectance relative to diffuse white.

Table 5. Integral of target reflectance.

| Target Color | Red | Blue | Green | Gray |
| --- | --- | --- | --- | --- |
| Integral of reflectance (photopic) | 13.0 | 17.3 | 15.4 | 22.3 |
| Integral of reflectance (scotopic) | 8.3 | 27.1 | 15.6 | 21.5 |

Three dependent variables measuring visibility were used in the experiments for this project: detection distance, color-recognition distance, and orientation-recognition distance. The variables used depended on the experimental goals and are described in the following subsections.

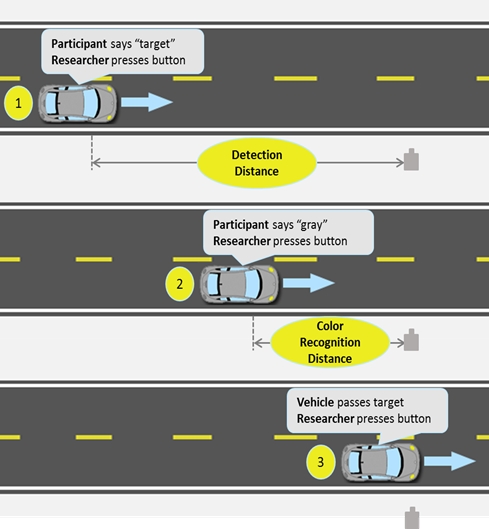

The detection distance was the distance at which a participant was able to detect the presence of an on- or off-axis pedestrian or target. To measure detection distance, researchers instructed participants to say “person” or “target” when they first saw the pedestrian or target. At that moment, an in-vehicle experimenter pressed a button to flag the data. The in-vehicle experimenter also pressed a button to flag the data when the vehicle passed the pedestrian or target. Later analysis calculated the distance between these two points to determine the detection distance. If a participant failed to see an object, it was counted as a miss.

The color-recognition distance was the distance at which a participant was able to accurately recognize the color of an on- or off-axis pedestrian or target. Participants were instructed to call out the color of an on- or off-axis pedestrian’s clothing, or the color of the target, as soon as they saw it. The researcher in the vehicle then pressed a button to flag the data. As stated above, the in-vehicle experimenter also pressed a button to flag the data when the vehicle passed the pedestrian or target. The distance between the point where the participant identified the color and the point where the vehicle passed the pedestrian or target was the color-recognition distance. If participants recognized the wrong color, it was recorded as a miss. Figure 33 is a diagram of how detection and color-recognition distances were measured.

Figure 33. Diagram. Measuring detection and color-recognition distances.

Orientation-Recognition Distance

The orientation-recognition distance was the distance at which a participant was able to accurately recognize the direction a pedestrian was facing, or the direction the tab on the target was pointing. Participants were instructed to call out the direction as soon as they were able to discern it. The researcher in the vehicle used button presses to flag the data as was done for the other dependent variables, and the orientation-recognition distances were calculated. If a participant failed to recognize which way an object was facing, it was recorded as a miss.

Participants were selected from the VTTI subject database based on their age and gender to form two gender-balanced groups, older and younger.

Screening criteria for participant selection included the following:

Participants were compensated $30 for every hour they participated in the study, including time spent responding to questionnaires.

Telephone Screening

After participants conforming to the age and gender requirements were identified, a research assistant called them to ask whether they were interested in participating in the study, to gain consent for a screening, and to perform the screening. Those eligible based on the telephone screening were scheduled to come to VTTI for the vision screening.

Vision Screening

When the participant arrived, a researcher obtained consent, had the participant fill out a W9 form and health questionnaire, and administered vision tests: useful field of view (UFOV), brightness acuity, visual acuity, color vision, and contrast sensitivity. Not all vision tests were administered for all experiments. Results for participant vision testing are listed in the chapters describing the individual experiments. The following describes elements of vision screening:

Almost all of the experiments required participants to drive on the Smart Road while detecting/recognizing objects.

In-Vehicle Experimenter Activities

After participants completed the consent and testing portion of the session, in-vehicle experimenters escorted the participants to the test vehicles, where the participants were familiarized with the vehicle controls. They then drove the vehicles to the Smart Road and drove a practice lap, followed by the number of experimental laps required by the experiment. Most experiments used two vehicles. During the experiment, the vehicles were driven through the test section of the road one at a time, pausing at one of the turnarounds while the other vehicle completed its lap, so that the two vehicles would not interfere with each other. Participants completing experimental laps would state whether they saw a target or pedestrian, what color they saw, and which way the object was facing, and the researcher recorded the detections/recognitions with button presses, as described in the section on dependent variables. The in-vehicle researcher ensured that the participant was driving safely and at the correct speed. For safety reasons, when using confederate pedestrians, after the participant saw the pedestrian, the researcher radioed the pedestrian and asked him or her to move away from the road.

On-Road Experimenter Activities

On-road experimenters acted as confederate pedestrians and positioned themselves and/or the targets alongside the road according to the protocol for that experiment and lap. They were asked to clear the road when instructed by the in-vehicle experimenter, or when a vehicle approached within about 24 m (80 ft). Experimenters also ensured the overhead lighting was set to the correct level and changed headlamp filters as required by the experiment and lap. The test vehicles would only pass through the test portion of the road once the on-road experimenters confirmed the apparatus was in place.

For each of the experiments, photometric analyses were performed to determine the lighting conditions and the visibility conditions during the experiment. Photometric systems were also used to monitor the lighting levels during the experiment and characterize the lighting system’s performance. Four different photometric measurement systems were used during the experiments: two luminance-based systems and two illuminance-based systems. All are described in the following subsections.

Both luminance-based photometric systems were imaging systems providing the luminance distribution across a scene.

The first system was a Radiant Imaging® ProMetric® 1600 photometer, a stand-alone commercial test system providing a 1,024- by 1,024-pixel image of a scene. This system can be mounted in the vehicle and provides static luminance measurements from stationary vehicles.

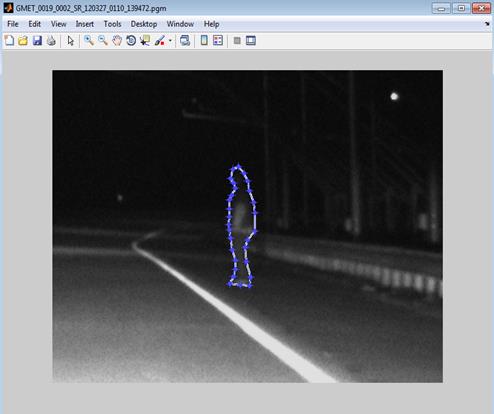

The second system was a calibrated luminance camera recording luminance data regarding the road in front of the vehicle. The luminance cameras in the two vehicles were calibrated by comparing their video to known luminance values.(92) The luminance camera systems were mounted to the windshields, linked with the vehicle instrumentation system, and took dynamic data from moving vehicles. Every time the participant detected a target or pedestrian, luminance data were captured.

The reduction of the data from the two luminance-measurement systems was performed in a similar manner. Data from the Radiant Imaging® ProMetric® camera were analyzed with ProMetric® software. For data taken with the luminance cameras, VTTI-developed data-reduction software was used.(93) In both cases, the analysis software displayed the captured image, allowed the user to select an area of interest, and then calculated the luminance of that area of interest. Multiple areas of interest were selected to calculate luminance contrast. A screenshot of the VTTI program is shown in figure 34.

Figure 34. Screenshot. Luminance analysis software.
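The area-of-interest calculation can be sketched in a few lines. This is a minimal illustration, not the ProMetric® or VTTI software: the image is a hypothetical grid of luminance values in cd/m², the function names are invented for this sketch, and the contrast is computed as Weber contrast (target minus background, divided by background), which is an assumed definition because the text does not state which contrast formula was used.

```python
def mean_luminance(image, top, left, height, width):
    """Mean pixel luminance (cd/m^2) inside a rectangular area of interest."""
    rows = image[top:top + height]
    values = [v for row in rows for v in row[left:left + width]]
    if not values:
        raise ValueError("area of interest is empty")
    return sum(values) / len(values)


def weber_contrast(target, background):
    """Weber contrast: (target - background) / background (assumed definition)."""
    if background <= 0:
        raise ValueError("background luminance must be positive")
    return (target - background) / background


# Hypothetical 6-by-6 luminance image: uniform 1.0 cd/m^2 background
# with a brighter 2-by-2 object at 1.5 cd/m^2 in the middle.
image = [[1.0] * 6 for _ in range(6)]
for r in range(2, 4):
    for c in range(2, 4):
        image[r][c] = 1.5

target = mean_luminance(image, top=2, left=2, height=2, width=2)
background = mean_luminance(image, top=0, left=0, height=2, width=6)
contrast = weber_contrast(target, background)
```

Selecting one area on the object and one on its surroundings is what makes a contrast value possible; a single area of interest yields only a luminance.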

The two illuminance-based systems were a handheld Minolta® T10 meter and the VTTI RLMMS. The handheld meter was used to measure the vertical illuminance (VI) and to verify the lighting level on the road during the experiment. The RLMMS is a mobile measurement system that allowed for the illuminance characterization of the entire roadway and was used to calibrate the lighting system.

Detection/Recognition Distances

The detection, color-recognition, and orientation-recognition distances for objects were the primary dependent variables in almost all of the experiments. These distances were reduced from the data stream provided by the instrumentation system in the vehicle. Typically, these distances were calculated based on the in-vehicle experimenter’s button press at the moment of detection or color recognition and a button press at the moment the vehicle passed the visual object. The button presses were accurate to within 100 ms and were logged to a data file. Whether it was taken from the button presses or from the recorded video, the moment of detection/recognition was identified in the data file containing the vehicle’s GPS coordinates, which were accurate to within 5 cm (2 inches) and recorded using the vehicle data acquisition system. Detection/recognition distances were calculated from the GPS coordinates at the moment of detection and the moment the vehicle passed the object.
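The distance calculation from two flagged GPS positions can be sketched as follows. This is an illustration under stated assumptions rather than the project's data-reduction code: the record layout, the flag names, and the coordinates are hypothetical, and the sketch uses the straight-line great-circle (haversine) distance, whereas the text does not say whether distances were measured in a straight line or along the roadway.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def flagged_distance_m(records, event_flag, pass_flag="pass"):
    """Distance between the first record flagged event_flag and the first
    record flagged pass_flag. Returns None when either flag is absent,
    which this sketch treats as a miss."""
    event = next((r for r in records if r["flag"] == event_flag), None)
    passed = next((r for r in records if r["flag"] == pass_flag), None)
    if event is None or passed is None:
        return None
    return haversine_m(event["lat"], event["lon"], passed["lat"], passed["lon"])


# Hypothetical log: one flag at detection, one when the vehicle passes the object.
log = [
    {"t": 0.0, "lat": 37.0000, "lon": -80.0, "flag": None},
    {"t": 2.0, "lat": 37.0004, "lon": -80.0, "flag": "detect"},
    {"t": 7.0, "lat": 37.0014, "lon": -80.0, "flag": "pass"},
]

detection_distance = flagged_distance_m(log, "detect")   # about 111 m
color_distance = flagged_distance_m(log, "color")        # None: no color flag
```

On a curved test track, a straight-line distance can be shorter than the distance traveled along the road, so the choice between the two matters when the flagged points are far apart.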

In the scoping and MPI system performance experiments, button presses were the main method for identifying the moment of detection/recognition. If an error occurred and the button presses were suspected to be inaccurate, video and audio data, continuously recorded in the vehicles, were used to determine the moment when a participant detected/recognized the object. Once the exact point of detection was recorded, the time stamp associated with the detection was matched to a GPS location recorded by the vehicle’s radar unit. The distance from the point of detection to the target or pedestrian was calculated.

In the other three experiments, the overhead-lighting level, mesopic modeling, and the final performance experiments, all moments of detection/recognition were verified by examining video data, not by relying on button presses alone. Video verification is more precise because it removes any delay caused by an experimenter’s reaction time. This method was used for these three experiments because the differences sought were small and accuracy was paramount.
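To see why a timing delay matters for small differences, the following sketch converts a delay into the distance a vehicle travels during it. The speed and the reaction-time value are illustrative assumptions, not figures from the experiments; only the 100-ms button-press accuracy comes from the text.

```python
KMH_TO_MS = 1000.0 / 3600.0  # kilometers per hour to meters per second


def distance_error_m(speed_kmh, delay_s):
    """Distance traveled (m) during a timing delay at a constant speed."""
    return speed_kmh * KMH_TO_MS * delay_s


# Illustrative values: 56 km/h is a hypothetical test speed, and 0.5 s is a
# hypothetical experimenter reaction time. 0.1 s is the stated button accuracy.
speed_kmh = 56.0
error_button_accuracy = distance_error_m(speed_kmh, 0.1)
error_reaction_time = distance_error_m(speed_kmh, 0.5)
```

Because the error grows in proportion to both speed and delay, a delay of a few tenths of a second can be comparable to the small differences in detection distance that these experiments were designed to find.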

If the experiment used the luminance camera, the video from the moment when the participant detected or recognized the color of a pedestrian’s clothing or a target was correlated with a screen capture from the luminance camera. Data reductionists then selected regions of interest in the image from which luminance and contrast values were calculated.