U.S. Department of Transportation

Federal Highway Administration

1200 New Jersey Avenue, SE

Washington, DC 20590

202-366-4000

Federal Highway Administration Research and Technology

Coordinating, Developing, and Delivering Highway Transportation Innovations

| REPORT |

| This report is an archived publication and may contain dated technical, contact, and link information |

| Publication Number: FHWA-HRT-15-049 Date: April 2015 |

© American Concrete Pavement Association, www.acpa.org

The many quality control/quality assurance processes described in this chapter keep the focus of the people involved in the LTPP program on the quality of the work they do. Achieving a high level of data quality is a program expectation. This expectation created the environment that enabled development of cutting-edge quality control processes more than 10 years in advance of accepted industry standards and will enable continued improvements in quality control processes.

Since the program’s start, data quality has been a prime concern in the development and operation of LTPP activities. High-quality data have been critical to the program’s success. Given the program’s data requirements—a large number of data elements of varying complexity—as well as the available data acquisition and source options, achieving high-quality data is not only a major challenge, but also one that requires significant resources.

LTPP data requirements are based on achievement of the program’s goal and objectives and, as a result, include literally thousands of measurement concepts. During the program planning process, experts in each associated engineering discipline participated in developing the data requirements, which were then reviewed and critiqued by other stakeholders. Like the data requirements, the methods used to acquire LTPP data were developed by expert staff working in concert with program stakeholders and other authorities. A comprehensive planning process was used to examine and evaluate data acquisition methods that took into account budget, complexity, and time considerations.

Due to the complexity of many LTPP data elements, an early program decision was made to select qualified regional support contractors who had experience in operating technical data collection equipment and working with highway agencies to collect data. Increased planning was needed for data collection that requires special equipment, such as the falling weight deflectometers (FWDs) used in nondestructive deflection testing and the profilers used to collect profile data. These early program planning efforts to establish data requirements, acquisition methods, and sources provided a solid foundation for achieving high-quality data, but they were just the beginning of the LTPP quality initiatives.

Data quality control/quality assurance (QC/QA) processes have been developed, updated, and implemented throughout the LTPP program. The processes fall into several categories:

These processes are detailed over the remainder of this chapter. In addition, products that emerged from LTPP QC/QA development and implementation efforts are summarized in chapter 10. The various documents referenced in this chapter—directives, guidelines, manuals, and studies—are available at LTPP’s InfoPave™ Web site.

| Key Milestones in Data Quality Efforts | |

|---|---|

| 1987 | First Distress Identification Manual released |

| 1989 | SHRP-LTPP Customer Support Services established |

| 1991 | SHRP-LTPP reference calibration procedure for FWDs developed |

| 1992 | First Distress Accreditation Workshop held in Reno, Nevada |

| 1992 | ProQual developed |

| 1993 | Original LTPP Materials Testing and Handling Guide published |

| 1993 | Original Traffic Software used to process traffic data |

| 1994 | Software Problem Report form formally adopted |

| 1995 | Operational Problem Report formally implemented |

| 1997 | Comparisons with distress maps from prior manual surveys required |

| 1998 | FHWA regional operations audits formalized |

| 1998 | Data Analysis/Operations Feedback Reports process started |

| 2002 | LTPP Traffic Analysis Software (LTAS) first used by regional support contractors |

| 2006 | LTPP Materials Testing and Handling Guide revised |

| 2012 | Traffic Data Collection and Processing Guide (v. 3) issued |

After the data collection equipment and regional support contractors were selected, but before data collection began, three elements were identified as critical to providing a solid foundation for high-quality LTPP data: data collection standards, personnel requirements, and equipment requirements.

To promote uniform, consistent, and high-quality data, the LTPP program has published formal standards in the form of data collection guidelines, guides, and manuals that specify data collection frequencies, types of data collection, standard definitions, measurement procedures, test protocols, operation of electronic data collection devices, and data collection forms. These standards are implemented through the issuance of LTPP program directives, which present program policy to be followed by regional support contractors, materials laboratory contractors, and participating highway agencies. When guidelines are revised and updated, program directives are used to document changes in processes, procedures, equipment, and data collection activities.

A team process has been used to develop data collection guidelines. The process starts with the development of a draft guide by the central technical team. LTPP managers solicit review and comment from stakeholders—related expert task groups (ETGs), highway agencies, regional support contractors—on an initial draft. Feedback from the combined team obtained through written comments, teleconferences, Webinars, regional meetings, and national meetings is used to craft a proposed final version. The proposed guidelines are tested and refined through pilot activities and, when final, are issued through a formal LTPP program directive. Following implementation, data collection personnel can report equipment problems, issues with data collection forms, needed changes to procedures, and other issues using the formal Operational Problem Report (OPR) process. Changes and updates to the guidelines are also issued in a program directive.

FIGURE 9.1. Data collection guidelines published as manuals for profile measurements and processing, distress identification, and falling weight deflectometer measurements.

Some of the data collection guidelines have been compiled and published as manuals containing standards specific to the LTPP program. Examples include the highly requested Distress Identification Manual for the Long-Term Pavement Performance Program,1 the Long-Term Pavement Performance Manual for Profile Measurements and Processing,2,3 and the Long-Term Pavement Performance Manual for Falling Weight Deflectometer Measurements4 (figure 9.1). These publications, like most of the many other LTPP guides, manuals, and guidelines (some of which date back to 1988), have undergone major revisions and updates over the years. A list of the major LTPP data collection guidelines is presented in table 9.1.

In addition to providing standard definitions, measurement procedures, and data collection forms, guidelines also typically address equipment issues and, in the case of electronically collected data, software and data processing issues. For example, the latest version of the LTPP Manual for Falling Weight Deflectometer Measurements covers all aspects of measurement, from equipment setup through data handling procedures. Similarly, the LTPP Manual for Profile Measurements and Processing includes everything from equipment maintenance to inter-regional comparison tests and processing profile data in the office.

Data collection guidelines typically include and require the completion of data collection forms, whether data are collected using electronic means (which account for the bulk of LTPP data) or manual methods. These forms include written instructions for each requested item, along with any other information needed to complete the form. All forms are numbered and dated for document control, and revisions to forms are issued by formal directives that document the changes. Most of the data collection guidelines within the LTPP program are implemented through formal issuance of directives.

| Guideline | Date* |

|---|---|

| Distress Identification Manual for the Long-Term Pavement Performance Studies | 1987 |

| SHRP-LTPP Data Collection Guide | 1988 |

| SHRP-LTPP Interim Guide for Laboratory Materials Handling and Testing | 1989 |

| Framework for Traffic Data Collection for the General Pavement Studies’ Test Sections | 1989 |

| SHRP-LTPP Guide for Field Materials Sampling, Handling and Testing | 1990 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-5: Rehabilitation of Asphalt Concrete Pavements | 1990 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-6: Rehabilitation of Jointed Portland Cement Concrete Pavements | 1991 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-7: Bonded Portland Cement Concrete Overlays | 1991 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-1: Strategic Study of Structural Factors for Flexible Pavements | 1991 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-2: Strategic Study of Structural Factors for Rigid Pavements | 1992 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-8: Study of Environmental Effects in the Absence of Heavy Loads | 1992 |

| Manual for Profile Measurement: Operational Field Guidelines | 1994 |

| LTPP Seasonal Monitoring Program: Instrumentation Installation and Data Collection Guidelines | 1994 |

| SPS-2 Seasonal and Load Response Instrumentation, North Carolina DOT | 1994 |

| Data Collection Guidelines Under Less Than Ideal Conditions (Seasonal Monitoring Program) | 1995 |

| Specific Pavement Studies Data Collection Guidelines for Experiment SPS-9A: Superpave® Asphalt Binder Study | 1996 |

| Guidelines for IMS Data Entry for SPS-9 Project | 1997 |

| SPS-1, SPS-2 and SPS-8 Data Collection Guidelines for Experiments | 1997 |

| Guide to LTPP Traffic Data Collection and Processing | 2001 |

| LTPP Inventory Data Collection Guide | 2005 |

| Long-Term Pavement Performance Maintenance and Rehabilitation Data Collection Guide | 2006 |

| Guidelines for the Collection of Long-Term Pavement Performance Data | 2006 |

| Long-Term Pavement Performance Program Manual for Falling Weight Deflectometer Measurements | 2006 |

| Long-Term Pavement Performance Program Falling Weight Deflectometer Maintenance Manual | 2006 |

| Long-Term Pavement Performance Project Laboratory Materials Testing and Handling Guide | 2007 |

| LTPP Field Operations Guide for SPS WIM Sites (Version 1.0) | 2009 |

| LTPP Traffic Data Collection and Processing Guide (Version 1.3) | 2012 |

| Long-Term Pavement Performance Program Manual for Profile Measurements and Processing | 2013 |

| LTPP Information Management System (IMS) Quality Control Checks (updated annually) | 2013 |

| Distress Identification Manual for the Long-Term Pavement Performance Program | 2014 |

| *Year published. Earliest and most recent versions released are listed. Intermediate versions have been published. | |

Well-trained and knowledgeable data collection personnel are another critical element to achieving high-quality data. Accordingly, the data collection contractor selection process has included consideration of experience in operating various types of pavement data collection equipment, conducting field pavement data collection activities, or performing laboratory material tests, depending on the subject of the data collection contract. In addition, the LTPP program has established minimum criteria for data collection personnel, including previous experience in data collection, formal training with the data collection procedures using a training plan and syllabus approved by the LTPP program staff, and time spent assisting experienced personnel in performing data collection before doing so independently. Implementation of personnel requirements within the LTPP program is accomplished contractually with the data collection contractors or through formal program directives.5,6

In the case of pavement distress data, for example, an accreditation program was established in 1992, requiring that all pavement distress data be collected by accredited raters. The regional support contractor staff are required to attend the distress accreditation workshops held by the LTPP program to receive and maintain their accreditation. To be eligible to attend, raters first have to be trained to a high level of competence in the knowledge and procedures contained in the Distress Identification Manual using a combination of classroom and field training activities. The regional support contractors are required to provide formal training based on the FHWA National Highway Institute’s “Pavement Distress Identification Workshop” course. In addition, new raters are required to assist experienced personnel in performing distress data collection for asphalt and jointed concrete pavement (a minimum of two sections each) before becoming eligible to attend an accreditation workshop.7

The first distress accreditation workshops were held in 1992 in Reno, Nevada,8 and workshops were held at least annually early in the LTPP program. More recently, the workshops have been held less frequently due to budget limitations. The accreditation process consists of two major parts: a written examination to test the general knowledge of the rater and a field data collection or film interpretation examination to measure the rater’s capabilities in observing and recording distress data. Grading and accreditation are accomplished by comparing the rater’s results to the reference values established by a team of experienced raters.

FIGURE 9.2. Distress raters evaluate a pavement surface in an LTPP accreditation workshop, where raters’ proficiency is tested.

The distress accreditation workshops are intended for trained, experienced personnel only; they are not intended to train personnel. Their primary purpose is to assure high quality and uniformity in identifying pavement distress. Furthermore, to maintain their accreditation, distress raters have to meet minimum recertification requirements, including performing at least 15 surveys in a 12-month period—ideally evenly distributed throughout that time period—and successfully completing the accreditation workshop annually, or as required by the LTPP program. Figure 9.2 shows a distress accreditation workshop held in Columbus, Ohio, in May 2010.

Training sessions focused on the handling of weather and seasonal data were conducted as well. Personnel learned how to review the weather plots and identify and edit erroneous data collected from the automated weather stations, and they were trained in data processing QC procedures for the Seasonal Monitoring Program.

To promote consistency in data collection across the test sites, national and regional meetings of regional support contractor staff as well as teleconferences involving data collection personnel are conducted on each major data collection topic. These interactions, which vary in frequency according to the need and the maturity of each data collection activity, allow for the exchange of ideas and improvements as well as the discussion and resolution of issues.

In addition, the regional support contractors’ QC plans, mandated by the LTPP program, include processes for training new staff and reviewing the performance of existing staff, including some exchanges of equipment operators between the regions to review each other’s efforts. The training requirements within these QC plans address not just the distress data collection, but all collection activities such as FWD, profile, and the Seasonal Monitoring Program. (Regional contractors’ QC plans are addressed later in this chapter, along with FHWA QA audits.)

| Georgia faultmeter |

| Rod and level |

| Seasonal Monitoring Program Mobile Unit (CR10 data logger, Tektronix time domain reflectometer cable tester, electrical resistivity function generator, and digital multimeters) |

| Megadac (dynamic load strain sensors, stress sensors, and displacement sensors) |

| Profilometers (laser sensors, accelerometers, data collection triggers, distance-measuring instrument, surface temperature sensors, macrotexture measuring lasers) |

| Dipstick® profile measuring devices |

| Falling weight deflectometers (air and surface temperature sensors, load plates, load cells, deflection sensors, high-accuracy distance-measuring instrument) |

| Climate and subsurface monitoring equipment (temperature sensors, rain gauges, subsurface temperature probes, moisture probes, frost/thaw probes, piezometers) |

| Traffic monitoring equipment (piezo electric sensors, inductive loops, pneumatic tubes, radar or infrared beams) |

| Materials testing equipment (closed-loop servo hydraulic test system—load frame, load cells, hydraulic system, deformation devices, triaxial pressure chamber, temperature chambers, computer, dynamic cone penetrometer) |

| Weigh-in-motion equipment (bending plate, load cell, and quartz sensor) |

In developing the LTPP data collection plans, it was determined that the program should own and operate specialized data collection equipment to best achieve the program’s objectives. However, some data collection services were engaged due to consideration of the equipment cost, advanced operating requirements, or volume of data measurements to be performed. Contracted services have been used to collect photographic distress data, perform laboratory materials tests, and take ground penetrating radar measurements. The LTPP program has acquired FWD, inertial profiling, manual distress survey, dynamic load response, seasonal monitoring, and traffic data collection equipment.

LTPP data collection activities rely on equipment that is often fairly sophisticated and built from technologically advanced components to meet the program’s specifications (table 9.2). The basic inertial profiler components, for example, include laser sensors, accelerometers, data collection triggers, a distance-measuring instrument, and a computer system that combines the measurements from each of the components to provide the longitudinal profile data. In 2013, texture measurements, surface temperature, GPSr (Global Positioning System receiver), and photo and video capabilities were added.

Because properly functioning equipment is critical to achieving high-quality data, the maintenance, repair, checks, and calibration of equipment used in data collection have been an integral part of LTPP QC/QA efforts since the program’s beginning. Most data collection guidelines address equipment maintenance, repair, checks, and calibration procedures, and each regional support contractor is required to establish and follow an equipment preventive maintenance plan that is accepted and monitored by LTPP program staff. Throughout the course of the program, many directives have been issued that address specific maintenance checks and calibration procedures for LTPP data collection equipment.

DAILY CHECKS OF INERTIAL PROFILERS

For inertial profilers, it is imperative that the individual components as well as the overall system be calibrated, where possible, to National Institute of Standards and Technology standards. In those cases where it is not possible to directly calibrate a component, checks are carried out to ensure proper function. For example, these checks on the LTPP inertial profiler components are performed each day before data collection:

Calibrations and checks are performed on all data collection equipment to ensure proper functioning before the equipment is used in the field or laboratory. In those cases where it is not possible to calibrate a device, equipment checks are used. For example, temperature sensors (thermistor probes) in the Seasonal Monitoring Program were placed in substances of known temperature such as ice and boiling water. If the sensors were found to be outside an established range, they were returned to the manufacturer for adjustment or replacement.
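The thermistor check described above can be sketched in a few lines of Python. This is only an illustration, not the LTPP procedure: the function names, the 0.5 °C tolerance, and the reference values of 0 °C for ice and 100 °C for boiling water (which holds only near standard atmospheric pressure) are all assumptions, since the report does not state the established range.

```python
def sensor_within_range(reading_c: float, reference_c: float, tolerance_c: float = 0.5) -> bool:
    """Return True if a reading is within the tolerance of a known reference temperature.

    The default tolerance is a hypothetical placeholder, not an LTPP value.
    """
    return abs(reading_c - reference_c) <= tolerance_c


def check_thermistor(ice_reading_c: float, boiling_reading_c: float,
                     tolerance_c: float = 0.5) -> bool:
    """Check a probe against two reference points: an ice bath and boiling water.

    Assumes 0 degrees C for ice and 100 degrees C for boiling water at
    standard pressure; a real check would correct the boiling point for altitude.
    """
    return (sensor_within_range(ice_reading_c, 0.0, tolerance_c)
            and sensor_within_range(boiling_reading_c, 100.0, tolerance_c))


# A probe that reads close to both references passes; one that is 2 degrees off fails.
print(check_thermistor(0.2, 99.8))
print(check_thermistor(0.2, 98.0))
```

A probe that fails such a check would then follow the path described in the text, that is, return to the manufacturer.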

Although the LTPP program uses existing technology to the extent that is practical, in some cases it has had to develop calibration and check procedures for program equipment. For instance:

A number of software programs have also been developed and implemented to help identify potential equipment problems and to check calibrations. Examples include:

FIGURE 9.3. Profiler rodeo held at the Minnesota Road Research Project (MnROAD) site in Albertville. From May 14 to 18, 2007, researchers performed a comparison test of the profilers used by the four LTPP regional support contractors.

Another important concept, introduced by the LTPP program in the early 1990s, was the use of equipment comparisons, which are referred to as “FWD thump-offs” and “profiler rodeos.” Such comparisons were used not only as a means of accepting equipment for program use, but also for performing periodic evaluations of the equipment’s operational status and for cross-training exercises. For example, upon delivery of the profilers now in use, a stringent acceptance procedure was conducted in February 2013 on reference test sections in College Station, Texas, and a week-long training was held in April 2013.14

In the profiler rodeos, the LTPP profilers were compared against each other as well as against reference devices (the Face Dipstick and rod and level) to ensure that the equipment was collecting high-quality profile data (as described in chapter 6). These rodeos required the establishment of multiple test sections. The profile of each test section was then measured by the Face Dipstick and profilers, and the resulting data were analyzed to determine compliance with established elevation bias and precision criteria along with the International Roughness Index (IRI) values.15 Figure 9.3 shows four profilers prepared for testing at a 2007 rodeo in Minnesota.

Ultimately, it is the responsibility of the regional support contractors to maintain the data collection equipment they use in good-working, calibrated condition. This equipment has included devices such as the levels used for surface elevation surveys and pavement and air temperature probes used in conjunction with FWD testing and profile surveys. Furthermore, as noted earlier, each regional support contractor is required to establish and follow an equipment preventive maintenance plan as accepted and monitored by LTPP program staff.

While the data collection guidelines, personnel requirements, and equipment requirements described in the previous section provide an excellent foundation, additional QC elements are needed to assure that data are of high quality. Key elements to be considered during data collection are the ambient conditions, monitoring of data collection activities in the field by responsible personnel, and the use of QC tools to check equipment and data in the field.

Ambient conditions must not be neglected when planning for or performing data collection because they can significantly affect data quality, as shown in these LTPP-specific examples:

These ambient condition considerations led to the issuance of formal LTPP program directives, such as these:

Most of these directives have been incorporated into the latest versions of the data collection guidelines discussed in the previous section.

Reducing errors at the time of testing, when it is possible to correct a problem, is a priority within the LTPP program. It is standard procedure for the regional support contractor’s staff carrying out data collection activities to follow program guidelines and to continuously monitor the data being collected to ensure that the equipment is functioning properly and the data appear reasonable. If either questionable data or improperly functioning equipment is suspected during data collection, activities are suspended until the issue in question is resolved.

In the case of field activities, data collection personnel are required to survey the test section area to ensure there are no obstacles or debris on the pavement surface that could affect data collection. They are also required to check and to ensure that the start and end of each test section are well defined so that measurements are taken at the appropriate locations.

Once data collection activities begin, a number of monitoring tools are available to determine whether the data appear reasonable and the equipment is functioning properly. For example, cameras are installed on all LTPP FWD units to aid in establishing the accurate location for joint deflection tests on rigid pavements. Another example is the automated data checks contained in the LTPP FWD data collection software, which includes the following error conditions:

If deflection errors occur, the operator is required to attempt to identify the source of those errors. If the errors are determined to be due to problems with the FWD equipment, the problems have to be fixed before testing continues. If the errors are related to localized pavement conditions, the operator is required to reposition the FWD and comment on the condition. If the errors are determined to be due to pavement conditions that are representative of the test section as a whole or due to factors such as truck traffic that are out of the operator’s control, then the operator is required to accept the error and comment on the condition.

As described, there are many valid reasons why some of these “errors” may actually be an accurate reflection of pavement conditions, such as a transverse crack between sensors leading to a nondecreasing deflection warning. This is another example of why the LTPP program stresses the importance of using trained and experienced field personnel.
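As a rough illustration of the nondecreasing deflection warning mentioned above, the sketch below flags adjacent sensor pairs where the deflection does not decrease with distance from the load plate. It is a minimal sketch under stated assumptions: the function name is hypothetical, sensors are assumed to be listed in order of increasing distance from the load, and treating equal readings as a warning is a choice made here, not a documented LTPP criterion.

```python
from typing import List, Sequence


def nondecreasing_pairs(deflections: Sequence[float]) -> List[int]:
    """Return indices i where sensor i+1 reads a deflection not smaller than sensor i.

    Sensors are assumed to be ordered by increasing distance from the load plate,
    so deflections are normally expected to decrease along the list.
    """
    return [i for i in range(len(deflections) - 1)
            if deflections[i + 1] >= deflections[i]]


# A smoothly decreasing basin raises no warning.
print(nondecreasing_pairs([500.0, 400.0, 300.0, 200.0]))  # prints []

# A rise between the second and third sensors is flagged at index 1.
print(nondecreasing_pairs([500.0, 400.0, 420.0, 300.0]))  # prints [1]
```

A flagged pair is a prompt for the operator rather than a verdict: as the text notes, a transverse crack between sensors can produce exactly this pattern in valid data.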

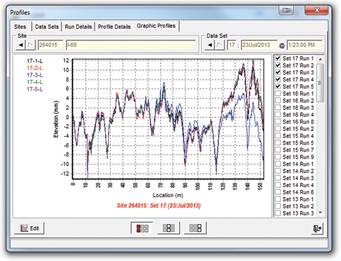

Once data collection has been completed, field personnel are required to review the collected data prior to leaving the site. In the case of longitudinal profile data, for example, profiler operators use the LTPP ProQual software (discussed in chapter 6) to evaluate the acceptability of the profile runs based on established criteria. ProQual uses collected profile data to compute IRI values for the left and right wheelpaths, as well as the average IRI of the two wheelpaths (figure 9.4).

Profiler operators are also required to use ProQual to perform a visual comparison of profile data collected by the left, right, and center sensors for one profile run. As a further check on the data, the operator compares the current profile data with those obtained during the previous site visit. If there are discrepancies between profiles from the current and previous site visits, the operator is required to investigate whether these differences were caused by equipment problems or were due to physical pavement features such as those caused by maintenance or rehabilitation activities.

FIGURE 9.4. ProQual software, used to evaluate the acceptability of profile data.

After the profile visual comparison is completed, an IRI comparison of current versus previous site visit data is also performed. If IRI values from the most recent profiler runs meet the LTPP criteria and the operator finds no other indication of errors, no further testing is needed at that site.
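The IRI steps described above (averaging the two wheelpaths, then comparing the current visit with the previous one) can be sketched as follows. This is not ProQual's logic: the function names are hypothetical, and the 10 percent tolerance is a placeholder assumption, since the report does not state the LTPP acceptance criteria.

```python
from statistics import mean
from typing import Sequence


def average_iri(left_iri: float, right_iri: float) -> float:
    """Average the IRI values of the left and right wheelpaths."""
    return (left_iri + right_iri) / 2.0


def needs_investigation(current_run_iris: Sequence[float],
                        previous_visit_iri: float,
                        tolerance_fraction: float = 0.10) -> bool:
    """Flag a site when the mean IRI of the current runs differs from the previous visit.

    tolerance_fraction is a hypothetical threshold, not an LTPP criterion.
    """
    current = mean(current_run_iris)
    return abs(current - previous_visit_iri) > tolerance_fraction * previous_visit_iri


# Average of the two wheelpaths for one run (values in m/km).
print(average_iri(1.0, 2.0))  # prints 1.5

# Current runs close to the previous visit: no follow-up suggested.
print(needs_investigation([1.50, 1.52, 1.49], 1.48))  # prints False

# Current runs well above the previous visit: operator should investigate.
print(needs_investigation([2.0, 2.1], 1.5))  # prints True
```

A flagged difference does not by itself mean the data are wrong; the operator still has to decide whether it reflects an equipment problem or a real change such as maintenance or rehabilitation.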

Other examples of automated field data collection QC tools used within the LTPP program include the following:

There are also instances within the LTPP program where data collection is not automated, as is the case with manually collected distress data. In those instances, efforts were made to develop and implement procedures that ensure consistent and uniform data. In the case of the manual distress surveys, for example, the regional support contractors were required to run the DiVA (Distress Viewer and Analysis) software on each section’s data after the survey as part of the office review to identify inconsistencies among previous surveys. (The DiVA software is described in greater detail later in this chapter.) Furthermore, regional support contractor staff in the field are required to comply with the following policy:

The intent of this policy is to promote consistency, not to perpetuate errors. The rater is required to note disagreements with the prior map information and bring any issues to the attention of the office reviewer for resolution. In addition, inconsistencies with prior surveys are required to be documented by the rater using video or photographs.

After data have been collected, the regional support contractors carry out numerous quality checks before entering the data into the database. These checks range from basic—are profile data files readable and complete, is the manual distress data package complete, are survey date stamps correct—to sophisticated, as explained below. The primary objective of these data checks is to prevent “bad” (i.e., erroneous) data from being entered into the database. Examples of the data check tools developed by the LTPP program to avoid loading bad data into the LTPP database include:

Electronic data obtained from third parties are also evaluated and subjected to automated data checks before entry into the LTPP database. Two of the largest modules of data from other noncontract agencies are traffic monitoring data and climate data. The following explains the data checks that have been used by the LTPP program for these data:

MIGRATION TO LTAS: EXAMPLE OF LTPP-DEVELOPED QUALITY CONTROL SOFTWARE

The LTPP Traffic Analysis Software (LTAS) provides multiple functions for evaluating and reviewing traffic data collected at the LTPP test sites by highway agencies and LTPP contractors. LTAS provides both graphical and automated range checks and statistical checks to identify suspect, invalid, duplicate, and erroneous traffic load and classification data.

Early in the program, each LTPP regional support contractor used in-house developed software to check the files received from highway agencies. These checks included detection of issues related to date gaps, lane identification, direction, station identification, start and end dates, times, left justification, optional agency data, blank lines, invalid line lengths, and the like, as well as sorting of the data. The files received were compared with the forms for classification and weight files submitted by the participating highway agency. Data identified as suspect were returned to the agencies for review and comment. Data identified as erroneous through this process were purged from the system before generation of annual traffic estimates.

Later in the program, LTAS was developed to ensure uniformity and consistency of processing traffic data between LTPP regions and to provide detailed QC checks of the data prior to making them available to the public. LTAS is used to perform a detailed review that identifies data that are atypical for the time period and test site. The software currently performs quality checks on automated vehicle classification (AVC) and weigh-in-motion (WIM) data, which are output graphically. These checks include:

AVC Data:

WIM Data:

Comparison of AVC and WIM Data:

In addition to the QC graphs, LTAS has reports that can provide assistance in looking at the data to determine its accuracy. Some of these reports are:

The three Annual Summary Reports include monthly data, monthly statistics, and error summaries. Since LTAS was first implemented in 2002, the software has evolved and continues to evolve to enable provision of high-quality data to LTPP data users.
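The file-level screening described above for incoming agency traffic files (blank lines, invalid line lengths, date gaps) can be illustrated with a minimal Python sketch. This is not the code used by the regional contractors or by LTAS: the function names, the fixed `expected_length` parameter, and the assumption of one record per line and one expected record day per calendar day are all hypothetical and chosen only for illustration.

```python
from datetime import date, timedelta


def screen_lines(lines, expected_length):
    """Return (line_number, problem) pairs for blank or wrong-length lines.

    expected_length is a hypothetical fixed record width; real traffic
    file formats define their own record layouts.
    """
    problems = []
    for number, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if not text.strip():
            problems.append((number, "blank line"))
        elif len(text) != expected_length:
            problems.append((number, "invalid line length"))
    return problems


def find_date_gaps(dates):
    """Return (last_day_before_gap, first_day_after_gap) pairs.

    Assumes one or more records are expected for every calendar day
    between the first and last date supplied.
    """
    ordered = sorted(set(dates))
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > timedelta(days=1):
            gaps.append((earlier, later))
    return gaps
```

In a workflow like the one described, lines or date ranges reported by such checks would be candidates for return to the submitting agency for review, not for automatic deletion.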

FIGURE 9.5. The Traffic Monitoring Guide, published by FHWA’s Office of Highway Policy to assist States with collecting and submitting traffic data in a uniform format to FHWA.

As data are entered into the database by the regional support contractor, they undergo a hierarchy of automated, progressive QC checks. The results of these checks are recorded in the record status field. Every data record in the database starts with a record status of A. When a data record with a status of A passes all level B checks, it is assigned a status of B, and so on. (Chapter 8 provides more detail about the different quality checks performed on the data.) Over time most of the level-B checks were removed, which left three major types of database QC checks:

The QC level checks are performed sequentially. Level D checks are applied only to records passing level C checks, and level E checks are applied only to records passing level D checks. Record statuses of A and B are used for data that either have not undergone QC check processing or have not passed the level C checks. If a record fails a check, its record status remains at the next lower status. For example, records failing a level D check have a status of C. Alternatively, the record status can be manually upgraded if the record has been examined and has been found to be acceptable.
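The sequential logic of the record status field can be sketched in Python as follows. This is only an illustration of the hierarchy described above, not the LTPP database software: the function name, the dictionary of checks, and the idea of expressing each check as a function returning true or false are assumptions, and any example checks passed to it are hypothetical.

```python
# Status levels above A, in the order the checks are applied.
QC_LEVELS = ("B", "C", "D", "E")


def assign_record_status(record, checks_by_level, manual_status=None):
    """Return the record status after running the checks in sequence.

    checks_by_level maps a level ("B".."E") to a list of functions that
    take the record and return True when the check passes (hypothetical
    structure). A level with no checks is passed trivially. Checks at a
    higher level are never run once a lower level has failed.
    manual_status models a manual upgrade made after a reviewer has
    examined the record and found it acceptable.
    """
    if manual_status is not None:
        return manual_status
    status = "A"  # every record starts with a status of A
    for level in QC_LEVELS:
        checks = checks_by_level.get(level, [])
        if not all(check(record) for check in checks):
            break  # status stays at the last level passed
        status = level
    return status
```

Under this sketch, a record that fails a level D check is returned with a status of C, which matches the example in the text. Because evaluation stops at the first failed level, higher-level checks can safely assume that the lower-level conditions hold.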

Records with level E status could mean either of the following:

In either case, level E records may contain errors that have not been detected by the data review process.

On the other hand, records with a non-level E status could be interpreted as any of the following:

It is important to note that data users assume responsibility for conclusions based on their interpretation of the data collected by the LTPP program. Level E data should not be considered more reliable than non-level E data. Likewise, non-level E data should not be considered less reliable than level E data. The record status for non-level E data can be used as a relative indicator of potential issues or pavement anomalies that might exist for these data.

It was not practical, particularly when considering the wide variety of materials, climatic conditions, and local practices, to inspect all of the data for all types of potential anomalies. As the program evolved and improvements were made to the data QC checks, level E data included in previous releases may have been reclassified. It is also important to note that the LTPP program works diligently to identify and address critical data errors.

Likewise, it is important to recognize that the program has established standard and formal methods for the correction of data errors identified from the database checks. It is the LTPP program’s policy to not load known “bad” data into the database, which is why the automated data check processors and screening methods were developed. Although steps are taken to prevent entry of erroneous data, with such vast quantities of data coming from multiple sources, there are instances when data in the database are still found to contain typographical errors.

When data errors are identified, the standard response is to correct the error if possible, to remove the data from the database, or to place the data at a lower data quality status. Error correction procedures are transmitted by LTPP program directives such as “Manual Upgrades to QC Checks,” which describes the steps to take when data fail an automated check.22

During the QC error resolution process, errors that are not possible to rectify are also identified. Some examples include:

The above database checks and review guidelines apply to data after entry into the LTPP database. Error correction guidelines are also contained in the field data collection and processing documents.

After the data have been entered into the LTPP database and have undergone checks by the regional support contractors, the technical support services contractor’s staff reviews the contents of the entire database before releasing data to potential users. See chapter 8 for illustrations of the data flow and QC checks at different points in time. Data problems identified following release are documented using the Data Analysis/Operations Feedback Report (DAOFR) process, which is discussed later in this chapter.

There are two types of QA procedures the LTPP program follows to maintain a high quality level in the database. These efforts, performed by the program staff, the technical support services contractor, and the regional support contractors, are discussed below.

Since the early days, the LTPP program has performed QA audits of data collection contractor activities, including those of the regional support contractors, materials testing laboratories, central pavement distress data contractor, and FWD calibration centers. However, those audits were not formalized until 1998 through “LTPP Regional Operations Quality Assurance Reviews,” a program directive that enhanced and further standardized the data collection process used by the regional contractors.23 This directive required, on a routine basis, the conduct of QA reviews of the regional data collection contractors in the areas of automated weather stations, distress, FWD, profile, and the Seasonal Monitoring Program.

After implementation of the directive, multiple QA reviews of regional support operations were conducted each year by teams consisting of four highly qualified individuals, typically including one from the LTPP program, two from the technical support services contractor, and one from a regional support contractor other than the one under review. These teams reviewed field and office operations during the course of their visits, sometimes together and at other times independently. Members of the review team were not allowed to interact with data collection or office personnel during the conduct of their activities; the reviewers could only observe and take notes.

Regional contractors were rated by the review team as being “Fully Compliant,” “Compliant—Minor Changes Required,” or “Non-Compliant—Major Changes Required,” depending on how closely LTPP guidelines and directives were followed. Individual personnel were also given a “Compliant” or “Non-Compliant” rating on the basis of the review results. In the event that one or more individuals were found to be “Non-Compliant,” the contractor was required to submit a remediation plan to the LTPP program office within 15 days of receiving such a rating. A follow-up review of “Non-Compliant” personnel was conducted within 6 months after the initial review and if at that time the individuals were still rated “Non-Compliant,” they were not allowed to collect data for the LTPP program until they were found to be compliant at a later review. Beginning in December 1998, only contractor personnel rated “Compliant” were permitted to collect LTPP data.

QA audits were also performed on FWD reference calibration facilities used by the program and operated under cooperative agreements with selected highway agencies. The facilities used LTPP-provided equipment and followed LTPP test protocols. Annual audits were performed in conformance with test protocols, audit results were documented, and certificates of compliance were issued.

QA audits for materials testing were performed independently by the American Association of State Highway and Transportation Officials (AASHTO). The LTPP program required that the materials testing contractors be certified by AASHTO standards.

In 2001, LTPP’s approach to QA audits was significantly revised with the re-letting of the four regional LTPP data collection contracts. As part of those contracts, FHWA required the regions to formally document their data collection and processing QC systems in accordance with ISO (International Organization for Standardization) 9001 quality management systems accreditation standards. The documents were referred to as “Regional Operations Quality Control and Data Flow Plans,” as described in the following section. Some of the relevant features of this management process include:

– Conducting both regularly scheduled and impromptu internal audits of compliance with QC, data collection and data processing guidelines, and procedures.

– Documenting internal audits.

– Documenting corrective actions resulting from internal and external audit findings.

– Conducting annual, or more frequent, reviews and updates of the QC and management procedures.

– Announced field or office visits to review designated sections of the QC and management plans. The different parts of the plans are rotated so that each part is reviewed on a 2-year cycle, budget permitting. Prior to an audit, a data review is conducted to identify data issues of concern to be investigated during the audit.

– Unannounced audits of field data collection or office personnel. Auditors arrive unannounced to observe activities and compliance with both the contractor’s internal requirements and the LTPP program’s requirements.

– A documented audit report that includes a description of audit activities, items reviewed, positive findings, corrective action requests, and improvement recommendations. All corrective action requests and improvement recommendations are discussed with the regional support contractor in order to reach an agreement on corrective actions to be taken. On each audit visit, all corrective action findings and improvement requests previously agreed to are reviewed.

Each region developed a formal Regional Operations Quality Control and Data Flow Plan circa 2001. These QC plans specified data collection, processing activities, data flow, and roles, responsibilities, and qualifications of staff members by position. QC activities were put in place to provide formal checks to identify bad, erroneous, and missing data prior to entry into the database. The regional contractors submitted QC and data flow plans for the following data elements:

Plans varied among the regions; for example, the North Atlantic Regional Support Contractor developed the first LTPP QC plan for the automated weather station. Initially this region prepared a separate document for each data element, but later combined these into one document. In contrast, the Western Regional Support Contractor prepared a single document covering the QC processes for all of the data elements they collected and processed.

In 2004, the regional support contractors developed Working Guides as a means of standardizing regional procedures and ensuring consistency in training, field data collection, maintenance, calibration, and the processing and uploading of data. The purpose of these guides was to ensure compliance with the Data Quality Act.24 In the North Atlantic Region, individual Working Guides were issued for each data element (like the initial QC and Data Flow Plans).

Although the QC processes discussed in the previous sections of this chapter can identify a wide array of data issues or problems, they cannot identify all of the potential data problems. Once these initial QC efforts have been completed, higher order data checks and reviews are used to identify other types of data issues. Important higher order review activities within the program include time-series checks, data studies, and engineering data analysis techniques. The term “data study” was introduced into the LTPP lexicon to indicate intensive higher order data reviews that employ a variety of analysis techniques performed against the entire LTPP data set and data subsets. While not in explicit alignment, these data studies tend to serve the same purpose as the level 2 QC checks described in the Strategic Highway Research Program (SHRP) 5-year report on the LTPP Information Management System (IMS).25 The final tier of higher order data checks comes from the results of formal analysis of LTPP data.

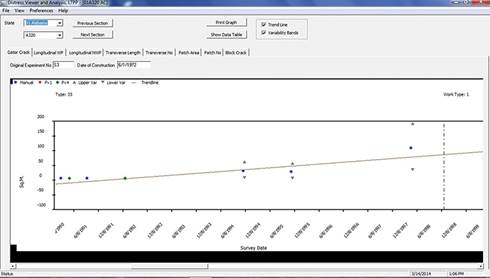

An important higher order review activity performed within the LTPP program is the time-series review of pavement section performance data. In the case of distress data, for example, once data have been uploaded to the database, time-series checks are performed using the DiVA program. This software automates extraction, compilation, and time-series review of distress data from the database. The key subset of distresses included in the time-series review is summarized below by pavement surface type:

The DiVA software produces a graph of the selected distress versus time for each section and distress type, starting from the time the test section was included in the LTPP program. An example is shown in figure 9.6. The graph includes both photographic and manual distress data for each test section along with error bands around the individual data points and a trend (best-fit) line to aid in the review. The variability bands are applied to the total quantity of distress, that is, the sum of quantities over all severity levels. The upper and lower limits for each distress represent three standard deviations. For normally distributed data, about 99.7 percent of values are expected to fall within three standard deviations of the mean.
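The idea of reviewing each survey against a trend line and a three-standard-deviation band can be sketched in Python as shown below. This is not the DiVA algorithm, whose internals are not described here. The sketch assumes an ordinary least-squares line as the best-fit trend and a single standard deviation for the distress type supplied by the caller; the function names and the `std_dev` parameter are hypothetical.

```python
def fit_trend(times, values):
    """Ordinary least-squares line through (time, value) points.

    Requires at least two distinct time values.
    """
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    return slope, intercept


def flag_surveys(times, values, std_dev, n_sigma=3.0):
    """Return indexes of surveys whose band does not reach the trend line.

    Each survey value carries a band of +/- n_sigma * std_dev, where
    std_dev is an assumed variability for the distress type. A survey
    is flagged for review when the trend line falls outside its band.
    """
    slope, intercept = fit_trend(times, values)
    half_width = n_sigma * std_dev
    flagged = []
    for index, (t, v) in enumerate(zip(times, values)):
        trend_value = slope * t + intercept
        if abs(v - trend_value) > half_width:
            flagged.append(index)
    return flagged
```

A single large outlier also pulls the fitted line toward itself, so neighboring surveys may be flagged along with it. That is one reason flagged points are best treated as prompts for an engineering review, for example a check for unreported maintenance, and not as automatic rejections.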

Actions resulting from the time-series review vary from data being acceptable (most common outcome), to identification of issues that may be caused by correctable items such as unreported maintenance or rehabilitation activities on the test section (most common issue), to correction of individual distress data classification issues (infrequent occurrence).

FIGURE 9.6. A distress-versus-time graph produced by the DiVA software.

In addition to the time-series checks, another important higher order review activity within the program is the conduct of data studies using data extracted from the database. Data studies are also used to develop, refine, or implement new or advanced data collection systems. Some examples of data studies conducted by the LTPP program include:

FWD calibration center pooled-fund study—A State-sponsored pooled-fund study, managed by the LTPP program, was established to investigate and improve the LTPP-developed FWD calibration protocols. The purpose of the study was to evaluate current methods, procedures, and instrumentation and develop an improved system compatible with current computer technology.30

The last tier of LTPP higher order review activities is associated with the performance of formal data analysis projects. A multiproject approach, by topic area, is used for analysis of LTPP data. The LTPP program, other FHWA program offices, the National Cooperative Highway Research Program, and the States have sponsored many research analysis projects that have used LTPP data. More detailed information concerning LTPP data analysis efforts is provided in chapter 10.

LTPP-sponsored analysis projects are primarily intended to yield insights, benefits, and products from analysis of the data. A fundamental requirement of any research investigation is evaluation of the empirical data used in the investigation. Because the operational structure of the LTPP program is meant to provide relevant data of known quality for analysis in support of program goals, the DAOFR process, discussed below, was established in 1998.31 Over time, the DAOFR process has been used to report suspect data issues discovered in data analyses and studies by users both within and outside the LTPP program.

An integral component of LTPP’s data quality approach is extensive peer review, both external and internal, of the various program areas and activities. At the start of the program, an external peer review process was created to monitor the status and progress of the LTPP studies and provide technical assistance to SHRP and later to FHWA, as discussed in chapter 2 and appendix A. The TRB LTPP Committee and its ETG structure incorporated experts and practitioners from the highway pavement community and provided advice regarding the technical and managerial direction of the LTPP program. National outreach stakeholder meetings such as the 1990 SHRP Assessment Meeting in Denver, Colorado; 1996 SHRP LTPP National Meeting in Irvine, California; 2000 LTPP SPS Workshop in Newport, Rhode Island; and 2010 LTPP Pavement Analysis Forum in Irvine, California, provided additional external peer review input on LTPP program activities.

Internal peer review is also a major contributor to high-quality data and the program’s success. Internal peer review touches on every element of the program, including the various QC/QA activities described in this chapter.

A few highlights of LTPP peer review activities, both external and internal, are summarized below:

Furthermore, to allow input from both external and internal sources, better serve its customers, and determine customer satisfaction, the LTPP program provided customer support services and customer survey processes starting in 1989. In 2006, FHWA moved the formal LTPP Customer Support Service Center to its Turner-Fairbank Highway Research Center, in McLean, VA.

Another important data quality element is the feedback process. Initial LTPP feedback efforts were mostly limited to national and regional meetings involving LTPP State Coordinators and regional support contractors. Teleconferences were also held with the data collection staff from the regional support contractor offices. These meetings and conferences were scheduled as needed and allowed problems, ideas, and solutions to be shared among all participants. Later, more formal procedures (OPRs, DAOFRs, and SPRs) were established to ensure that the feedback processes contribute toward the improvement of data quality and to the LTPP program’s success.

The first LTPP feedback mechanism, formally implemented by policy in 1995, was the OPR form, which enables personnel in the regions to report problem issues—equipment, software, and procedural problems associated with data collection, and, in particular, uncommon circumstances. This feedback mechanism provides a uniform way of reporting, handling, and tracking problems associated with the LTPP automated weather station, distress, FWD, profile, and seasonal monitoring data. Over time, LTPP program directives were issued extending and formalizing the OPR process to different data collection areas, such as materials sampling and testing.

A log of the OPRs is used to track the circumstances of the problems and their resolution. OPRs are reviewed, analyzed, and discussed by the LTPP program and contractor staff during teleconferences, and resolutions are provided by the regional support or technical support services contractors. Quarterly updates are sent to the program office and regions.

The OPR feedback process has resulted in several major benefits: standardizing the process for submitting problems associated with data collection activities; simplifying tracking when a problem was submitted, who was responsible for resolving it, whether or not it had been resolved, and how and when it was resolved; and reducing the probability of issues being forgotten or resolutions not making it back to the problem’s initiator. More than 400 OPRs had been submitted and resolved by 2014.

The second LTPP feedback mechanism formally instituted by policy (in 1998) was the DAOFR process,33 which enabled users of the database to report issues encountered during data analysis that suggest or demonstrate the need for corrective actions or further investigation (figure 9.7). In an effort to examine all data in the database, the program developed a series of DAOFRs for use by the regional support contractors and data analysts to provide commentary and to load, edit, fix, clean, or remove any suspect data collected by the regions or received from the highway agencies. The approach to completing DAOFRs varies for each data collection element. For example, the Traffic DAOFR process started with the out-of-study sites (sites that were no longer being monitored) as well as the tables that had been completely populated (no new data were expected) or were part of the module closeouts. Data for active SPS and General Pavement Study sites were examined next.

The DAOFR form and DAOFR resolutions are available to LTPP data users online.34 Situations in which use of the DAOFR process is appropriate include the absence of critical data for specific test sections; data that appear to be incorrect, contradictory, or otherwise suspect; data not collected but necessary to fill voids identified in the analysis; and recommendations arising from the analysis regarding data collection procedures that can be improved. By 2014, close to 1,400 DAOFRs had been submitted.

FIGURE 9.7. LTPP Data Analysis/Operations Feedback Report, used to provide feedback to the program regarding data that require investigation or correction.

An early program feedback effort was the implementation of the LTPP database SPRs, which the contractors used to report problems, comments, and change requests and to document changes made to the database management software. Although suggestions for software diagnosis and correction are often provided via phone calls and emails, actual diagnosis (by re-creating the conditions and modifying the software) occurs via the SPR process. An SPR submitted on a specific version of software includes a concise description of the problem and relevant extractions of test section information.

The SPR process was put in place in the early 1990s, and an SPR form was adopted to report issues with the traffic software in 1996,35,36 but the SPR process was not formally implemented by policy until 2005.37,38 In 2011, the SPR form was Web-enabled.39 In all, more than 4,300 pavement performance database and traffic SPRs have been submitted.

Another important approach to data quality, implemented by the LTPP program in its early years, was to adopt existing concepts from other large databases to maintain consistency across the database. Such adoptions include the following:

Still another data quality approach implemented from 2006 to 2008 was the self-assessment of the program relative to its compliance with U.S. Department of Transportation Information Dissemination Quality Guidelines, and the resulting report, as discussed in chapter 8.40,41

LTPP QC/QA practices have resulted in a high-quality pavement performance database. The program’s quality expectations have created an environment that promotes advancements in QC processes to help in understanding pavement performance. The program has developed an extensive and robust set of modern pavement engineering QC methods and tools, several of which have been adopted by the pavement engineering community. These and other products are discussed in the next chapter. The next section also includes chapters on lessons learned and plans for the future.